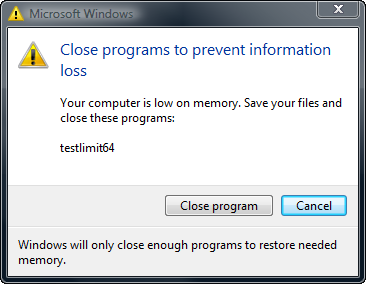

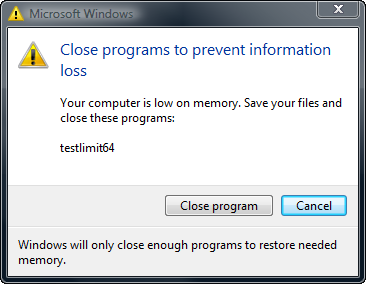

If there were no such thing as virtual memory, then once you filled up the available RAM your computer would have to say, “Sorry, you cannot load any more applications. Please close another application to load a new one.”

With virtual memory, what the computer can do is look at RAM for areas that have not been used recently and copy them onto the hard disk. This frees up space in RAM to load the new application.

The read/write speed of a hard drive is much slower than RAM, and hard-drive technology is not geared toward accessing small pieces of data at a time. If your system has to rely too heavily on virtual memory, you will notice a significant performance drop. The key is to have enough RAM to handle everything you tend to work on simultaneously. Then the only time you “feel” the slowness of virtual memory is the slight pause when you’re changing tasks. When that’s the case, virtual memory is perfect.

When it is not the case, the operating system has to constantly swap information back and forth between RAM and the hard disk. This is called thrashing, and it can make your computer feel incredibly slow.
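The jump from "fine" to "thrashing" can be illustrated with a toy simulation. This is only a sketch: it assumes a strict least-recently-used (LRU) eviction policy, which real operating systems only approximate, and the function name `count_page_faults` and the workload are made up for illustration.

```python
from collections import OrderedDict

def count_page_faults(accesses, ram_frames):
    """Count page faults for a sequence of page numbers under LRU eviction."""
    ram = OrderedDict()  # pages currently in RAM, least recently used first
    faults = 0
    for page in accesses:
        if page in ram:
            ram.move_to_end(page)        # mark as most recently used
        else:
            faults += 1                  # page has to be read back from disk
            if len(ram) >= ram_frames:
                ram.popitem(last=False)  # evict the least recently used page
            ram[page] = None
    return faults

# Hypothetical workload: a program that loops over the same 5 pages, 20 times.
accesses = list(range(5)) * 20

fits = count_page_faults(accesses, ram_frames=5)       # working set fits in RAM
too_small = count_page_faults(accesses, ram_frames=4)  # RAM is one page short
print(fits, too_small)  # prints "5 100"
```

With enough RAM, only the first touch of each page goes to disk. With RAM just one page short, every single access in this workload goes to disk, which is the thrashing behaviour described above.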

The area of the hard disk that stores the RAM image is called a page file. It holds pages of RAM on the hard disk, and the operating system moves data back and forth between the page file and RAM. On a modern Windows machine, the page file is named pagefile.sys; the .SWP extension (win386.swp) dates from the older Windows 3.x and 9x versions.

On Linux it is typically a separate partition (i.e., a logically independent section of an HDD) that is set up during installation of the operating system and is referred to as the swap partition. Linux can also use a swap file instead of a partition.

A common recommendation is to set the page-file size at 1.5 times the system’s RAM. In reality, the more RAM a system has, the less it relies on page files. You should base your page-file size on the maximum amount of memory your system is committing. Your page-file size should equal your system’s peak commit value (which covers the unlikely situation in which all the committed pages are written to the disk-based page files).
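To see how far the two approaches can drift apart, here is a small Python sketch. The function names are hypothetical and the two machines are made-up examples, not measurements.

```python
def rule_of_thumb_gb(ram_gb):
    """Page-file size using the common 1.5 x RAM recommendation."""
    return 1.5 * ram_gb

def peak_commit_based_gb(peak_commit_gb):
    """Page-file size set equal to the observed peak commit value."""
    return peak_commit_gb

# (RAM in GB, peak commit in GB) -- illustrative figures only
machines = [(4, 6.5), (64, 20)]

for ram_gb, peak_gb in machines:
    print(
        f"{ram_gb} GB RAM: rule of thumb {rule_of_thumb_gb(ram_gb):.1f} GB, "
        f"peak-commit based {peak_commit_based_gb(peak_gb):.1f} GB"
    )
```

For the small machine the two numbers land close together, but on the large one the rule of thumb asks for far more disk than the workload ever commits.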

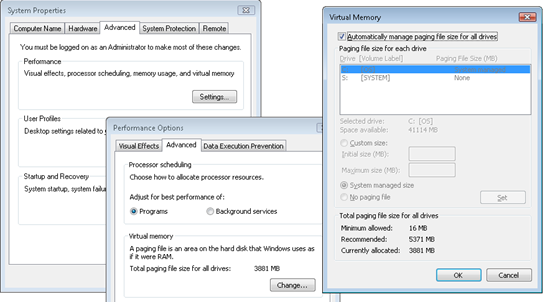

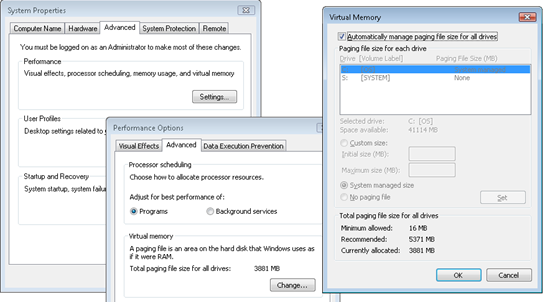

Locating the Page File (Windows)

Paging file configuration is in the System properties, which you can get to by typing “sysdm.cpl” into the Run dialog, clicking on the Advanced tab, clicking on the Settings button in the Performance section, clicking on the Advanced tab of the Performance Options dialog (this is really advanced), and then clicking on the Change button.

You’ll notice that the default configuration is for Windows to automatically manage the page file size.

Finding Committed Memory

In Windows XP and Server 2003, you can find the peak-commit value under the Task Manager Performance tab.

However, this option wasn’t included in Windows Server 2008 and Vista. To determine Server 2008 and Vista peak-commit values, you have two options:

- Download Process Explorer from the Microsoft “Process Explorer v11.20” web page. Open the .zip file and double-click procexp.exe. Click View on the toolbar and select System Information. Under Commit Charge (K), find the Peak value.

- Use Performance Monitor to log the Memory – Committed Bytes counter, and review the log to find the Maximum value.

Make sure you run the server with all of its expected workloads to ensure it’s using the maximum amount of memory while you’re monitoring.
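If you go the Performance Monitor route and end up with the log as a CSV file, picking out the maximum can be scripted. The sketch below makes some assumptions: that the first row is a header whose counter column ends in `\Memory\Committed Bytes`, and that each later row holds a timestamp followed by the sample value. The server name `SERVER01`, the sample figures, and the function name are invented for illustration.

```python
import csv
import io

def peak_committed_bytes(csv_text, counter_suffix="\\Memory\\Committed Bytes"):
    """Return the largest Committed Bytes sample found in a CSV counter log."""
    reader = csv.reader(io.StringIO(csv_text))
    header = next(reader)
    # Find the column whose header ends with the counter path.
    matches = [i for i, name in enumerate(header) if name.endswith(counter_suffix)]
    if not matches:
        raise ValueError("Committed Bytes counter not found in log header")
    column = matches[0]
    peak = 0.0
    for row in reader:
        try:
            peak = max(peak, float(row[column]))
        except (ValueError, IndexError):
            continue  # skip blank or malformed samples
    return peak

# Made-up log excerpt in the assumed layout.
SAMPLE_LOG = '''"(PDH-CSV 4.0) (GMT Standard Time)(0)","\\\\SERVER01\\Memory\\Committed Bytes"
"01/15/2010 09:00:00.000","3221225472"
"01/15/2010 09:00:15.000","5368709120"
"01/15/2010 09:00:30.000","4294967296"
'''

peak = peak_committed_bytes(SAMPLE_LOG)
print(f"Peak commit: {peak / 2**30:.1f} GB")  # prints "Peak commit: 5.0 GB"
```

To use it on a real log, read the file's text and pass it in place of `SAMPLE_LOG`; check the header of your own export first, since the layout here is an assumption.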

Maximum Page File Sizes

Windows XP/2003

When automatic management is enabled on Windows XP and Server 2003, Windows creates a single paging file whose minimum size is 1.5 times RAM if RAM is less than 1GB, and equal to RAM if it’s greater than 1GB, and whose maximum size is three times RAM.

Windows Vista/2008

On Windows Vista and Server 2008, the minimum is intended to be large enough to hold a kernel-memory crash dump and is RAM plus 300MB or 1GB, whichever is larger. The maximum is either three times the size of RAM or 4GB, whichever is larger.
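As a quick worked example of the Vista/Server 2008 rule, here is a small Python sketch. It reads "RAM plus 300MB or 1GB, whichever is larger" as the larger of (RAM + 300MB) and 1GB, which is an interpretation of the sentence above; the function name is hypothetical.

```python
def vista_2008_page_file_range_mb(ram_mb):
    """Return (minimum, maximum) automatic page-file size in MB."""
    minimum = max(ram_mb + 300, 1024)  # RAM + 300MB, but never below 1GB
    maximum = max(3 * ram_mb, 4096)    # 3 x RAM, but never below 4GB
    return minimum, maximum

for ram_mb in (512, 2048, 8192):
    low, high = vista_2008_page_file_range_mb(ram_mb)
    print(f"{ram_mb} MB RAM -> minimum {low} MB, maximum {high} MB")
# 512 MB RAM -> minimum 1024 MB, maximum 4096 MB
# 2048 MB RAM -> minimum 2348 MB, maximum 6144 MB
# 8192 MB RAM -> minimum 8492 MB, maximum 24576 MB
```

The 1GB and 4GB floors only matter on small machines; above roughly 1.3GB of RAM both limits simply scale with RAM.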

Limits

Limits related to virtual memory are the maximum size and number of paging files supported by Windows.

32-bit Windows has a maximum paging file size of 16TB (4GB if you for some reason run in non-PAE mode). Physical Address Extension (PAE) is a feature that allows 32-bit x86 processors to access a physical address space (including random access memory and memory-mapped devices) larger than 4GB.

64-bit Windows can have paging files that are up to 16TB on x64 and 32TB on IA64. For all versions, Windows supports up to 16 paging files, where each must be on a separate volume.

Some feel having no paging file results in better performance, but in general, having a paging file means Windows can write pages on the modified list (which represent pages that aren’t being accessed actively but have not been saved to disk) out to the paging file, thus making that memory available for more useful purposes (processes or file cache). So while there may be some workloads that perform better with no paging file, in general having one will mean more usable memory being available to the system (never mind that Windows won’t be able to write kernel crash dumps without a paging file sized large enough to hold them).

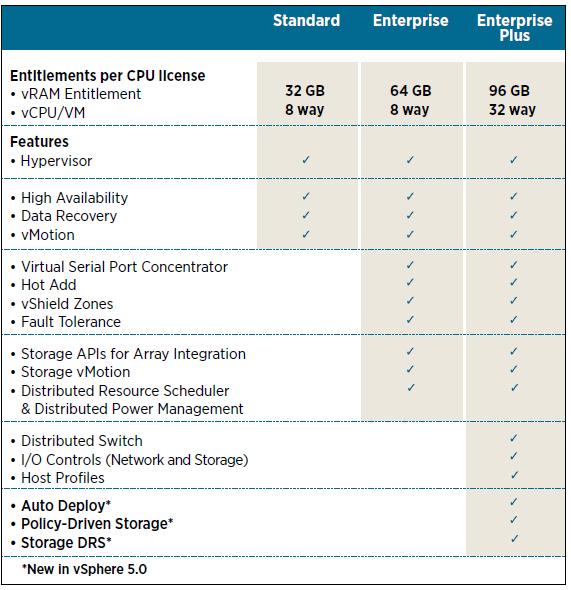

VMware and Page Files

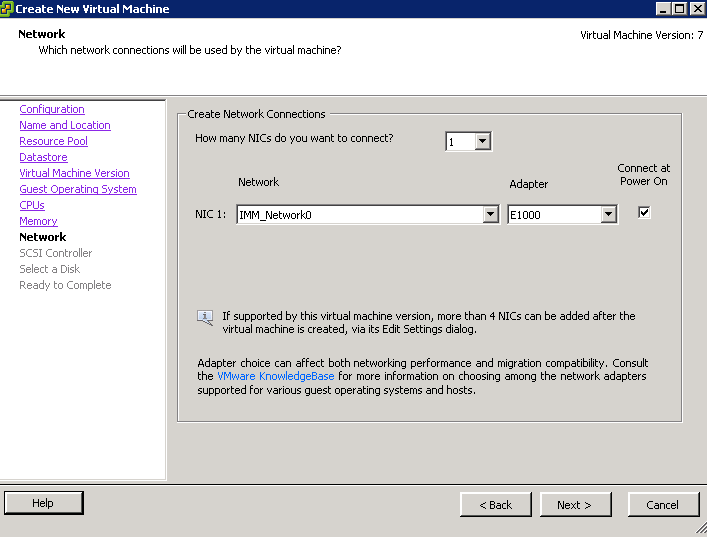

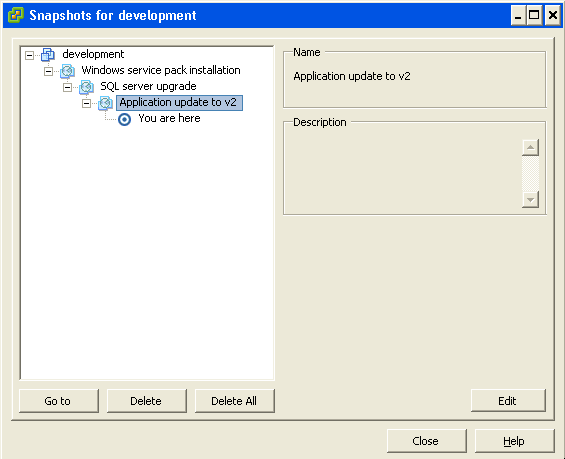

When creating VMs in VMware, whether Linux or Windows, VMware by default creates a swap file the same size as the assigned memory: a 1:1 mapping.

E.g., 60GB disk + 32GB swap file = 92GB total storage taken.

This came up in a meeting we had to discuss why some of our VMs, which were assigned 255GB of memory, were taking up so much storage space!

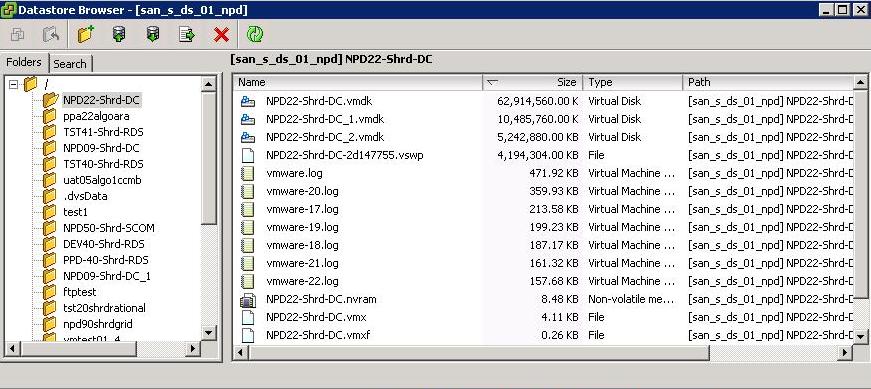

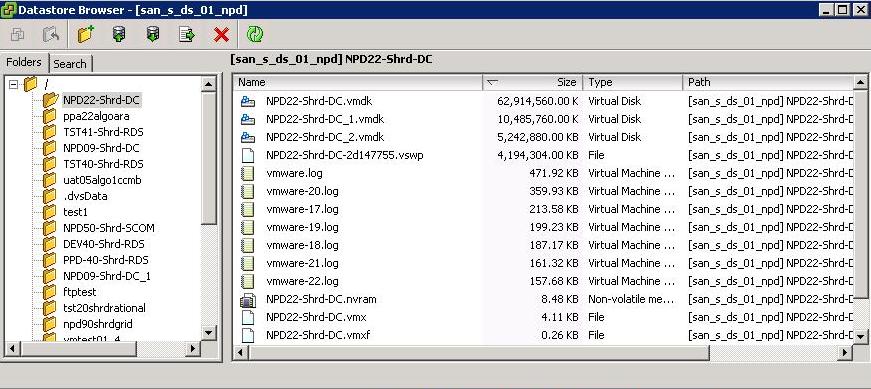

The swap file on VMware is called VM-NAME.vswp, and you can see it if you look in the Datastore Browser for a VM.

From a Forum

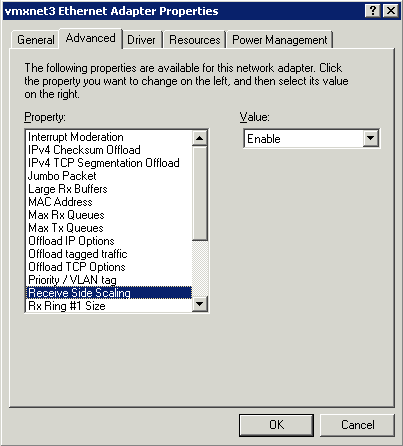

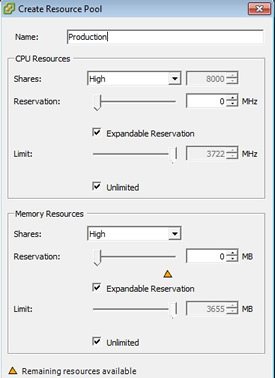

*.vswp file – This is the VM swap file (earlier ESX versions had a per-host swap file) and is created to allow for memory overcommitment on an ESX server. The file is created when a VM is powered on and deleted when it is powered off.

By default, when you create a VM the memory reservation is set to zero, meaning no memory is reserved for the VM and it can potentially be 100% overcommitted. As a result, a vswp file is created equal to the amount of memory that the VM is assigned minus the memory reservation that is configured for the VM. So a VM that is configured with 2GB of memory will create a 2GB vswp file when it is powered on. If you set a memory reservation of 1GB, it will only create a 1GB vswp file. If you specify a 2GB reservation, it creates a 0-byte file that it does not use. When you do specify a memory reservation, physical RAM from the host will be reserved for the VM and not usable by any other VMs on that host.

A VM will not use its vswp file as long as physical RAM is available on the host. Once all physical RAM on the host is used by its VMs and the host becomes overcommitted, VMs start to use their vswp files instead of physical memory. Since the vswp file is a disk file, it will affect the performance of the VM when this happens.
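The arithmetic in that forum answer is easy to sketch in Python. The function names below are hypothetical helpers, not part of any VMware tool, and the calculation ignores every other file a VM keeps on the datastore.

```python
def vswp_size_gb(assigned_memory_gb, reservation_gb=0):
    """Size of the .vswp file: assigned memory minus the memory reservation."""
    if not 0 <= reservation_gb <= assigned_memory_gb:
        raise ValueError("reservation must be between 0 and the assigned memory")
    return assigned_memory_gb - reservation_gb

def total_storage_gb(disk_gb, assigned_memory_gb, reservation_gb=0):
    """Virtual disk plus swap file, ignoring other VM files."""
    return disk_gb + vswp_size_gb(assigned_memory_gb, reservation_gb)

# The 2GB VM from the forum answer with 0GB, 1GB and 2GB reserved.
print(vswp_size_gb(2), vswp_size_gb(2, 1), vswp_size_gb(2, 2))  # prints "2 1 0"

# The 60GB disk / 32GB memory example, without and with a full reservation.
print(total_storage_gb(60, 32))      # prints "92"
print(total_storage_gb(60, 32, 32))  # prints "60"
```

Setting a reservation shrinks the swap file, but it also ties up that much physical RAM on the host, so it trades storage for memory rather than saving anything outright.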