The HA Question?

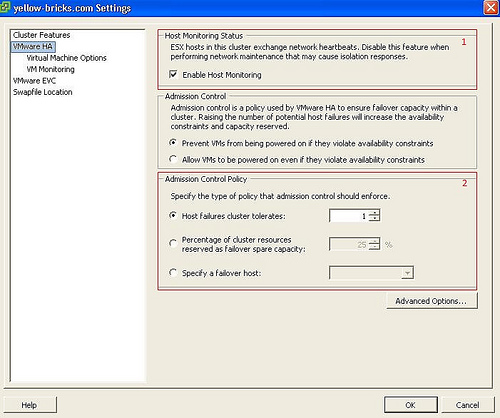

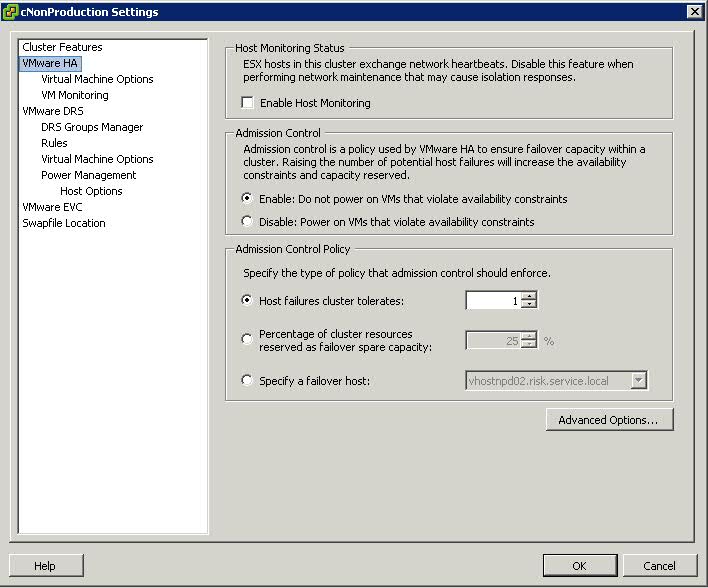

We were asked what actually happens to the hosts and VMs in vSphere 5.5 if an isolation event is triggered and we completely lose our host Management Network (which I have seen happen in the past!). I have written several blog posts about HA in the HA Category, so I am not going to go back over those. I am just going to focus on this question with our settings, which are shown below.

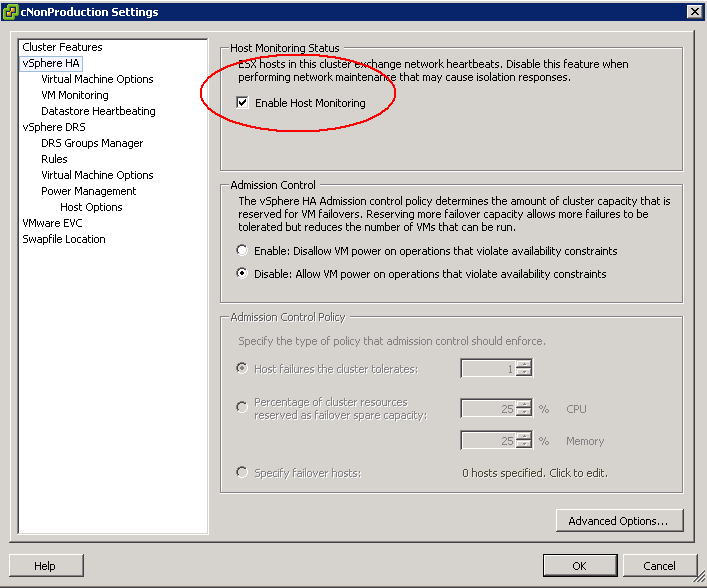

It is important to note that whether VMware HA restarts virtual machines on other hosts in the cluster after a host isolation or host failure depends on the “host monitoring” setting. If host monitoring is disabled, the restart of virtual machines on other hosts following a host failure or isolation is also disabled.

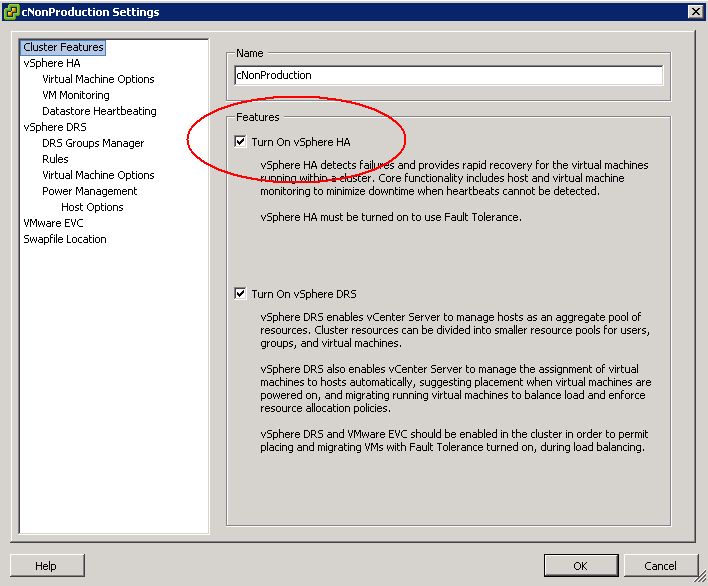

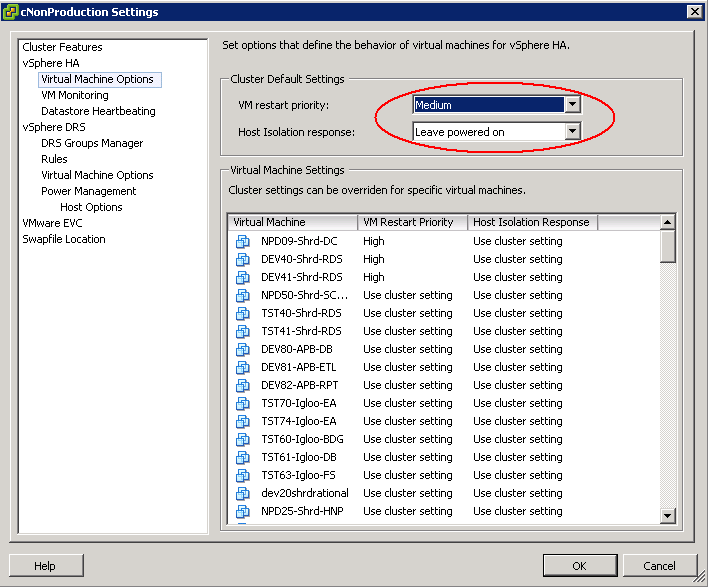

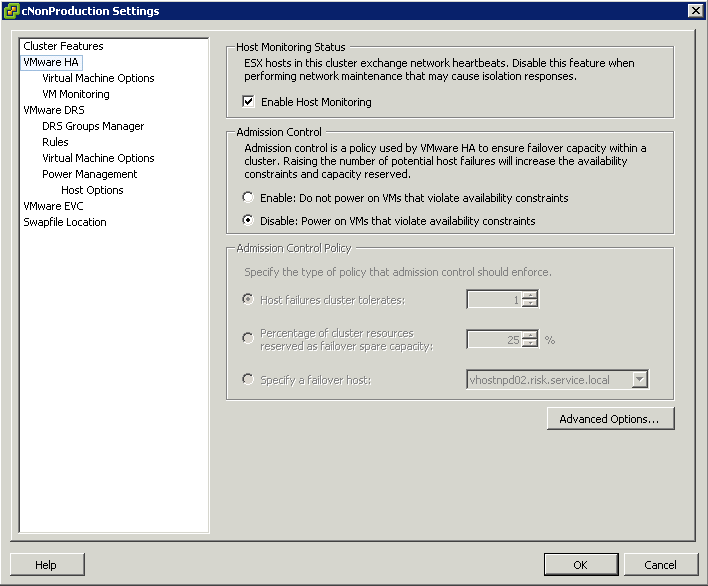

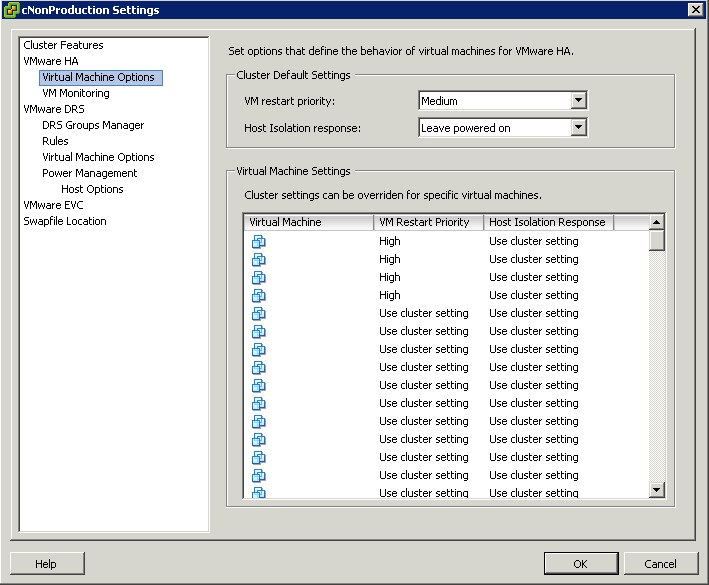

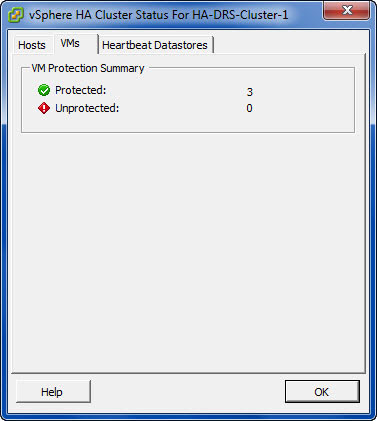

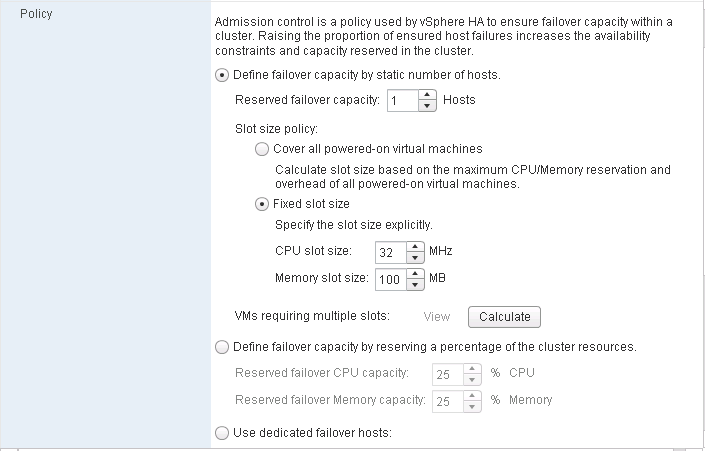

On our Non Production Cluster and our Production Cluster we have HA enabled and Enable Host Monitoring turned on, with Leave Powered On as our default isolation response.

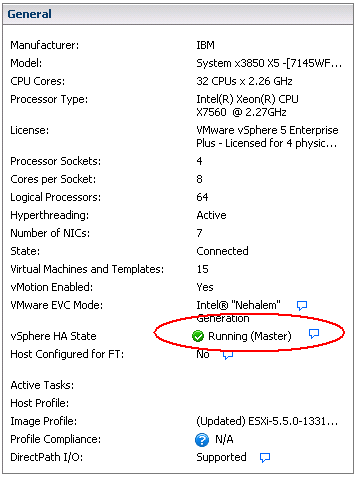

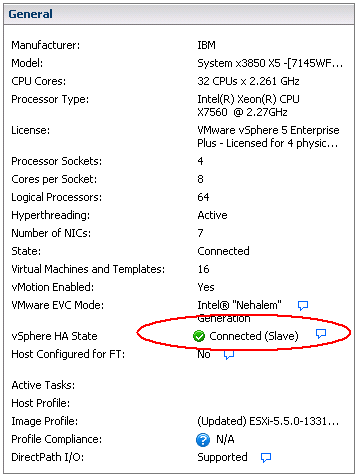

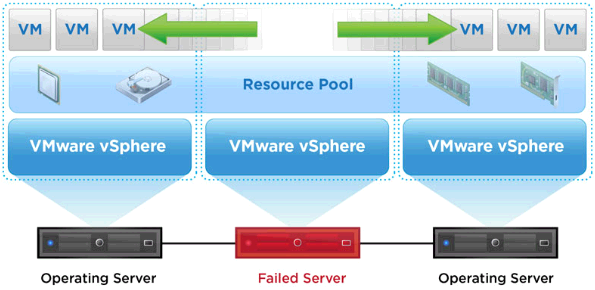

The vSphere HA architecture comprises Master and Slave HA agents. Except during network partitions there is one master in the cluster. A master agent is responsible for monitoring the health of virtual machines and restarting any that fail. The slaves are responsible for sending information to the master and restarting virtual machines as instructed by the master.

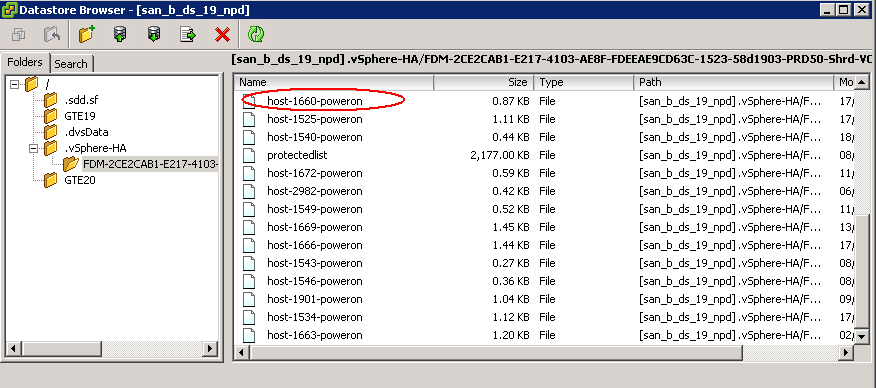

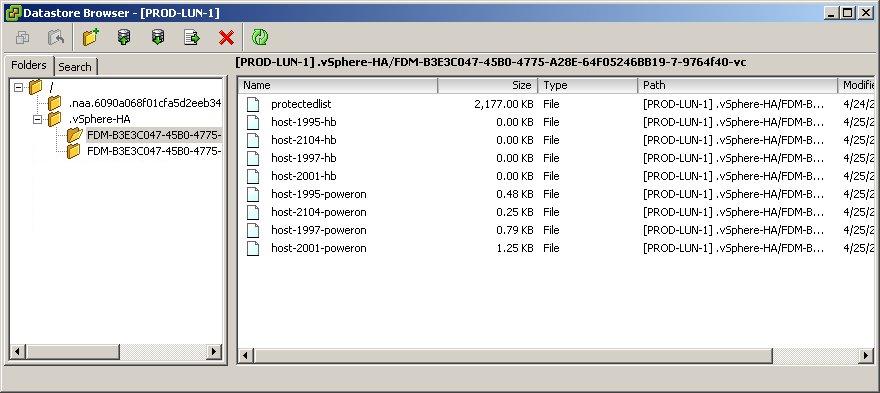

When an HA cluster is created it begins by electing a master, which tries to gain ownership of all the datastores it can access, either directly or by proxying requests through one of the slaves over the management network. It does this by locking a file called protectedlist that is stored on the datastores in an existing cluster. The master will also try to take ownership of any datastores that it discovers along the way and will periodically retry any datastores it could not access previously.

The master uses the protectedlist file to store the inventory and keep track of the virtual machines protected by HA. It then distributes the inventory across all the datastores.

There is also a file called poweron located on a shared datastore which contains a list of powered on virtual machines. This file is used by slaves to inform the master that they are isolated: the top line of the file contains a 0 or 1, with 1 meaning isolated.
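As a minimal sketch of how that first-line flag could be read: the exact on-disk layout of poweron is not described in this post, so the format assumed below (a flag line followed by one VM entry per line), the sample paths and the function name `parse_poweron` are all hypothetical and for illustration only.

```python
from typing import List, Tuple


def parse_poweron(text: str) -> Tuple[bool, List[str]]:
    """Parse a poweron-style file body.

    Assumed layout (illustrative only): the first line is "0" or "1",
    where "1" means the host considers itself isolated; every remaining
    non-empty line is treated as one powered-on VM entry.
    """
    lines = [line.strip() for line in text.splitlines() if line.strip()]
    if not lines or lines[0] not in ("0", "1"):
        raise ValueError("first line must be 0 or 1")
    isolated = lines[0] == "1"
    return isolated, lines[1:]


# Hypothetical sample content, not copied from a real host.
sample = "1\n/vmfs/volumes/ds01/vm-a/vm-a.vmx\n/vmfs/volumes/ds01/vm-b/vm-b.vmx\n"
isolated, vms = parse_poweron(sample)
print(isolated, len(vms))  # True 2
```

The point is only that a single flag on the datastore lets the master learn about isolation without any management network traffic.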

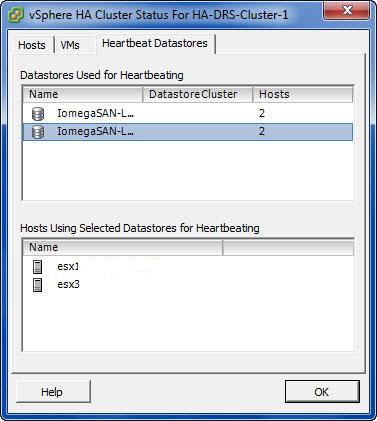

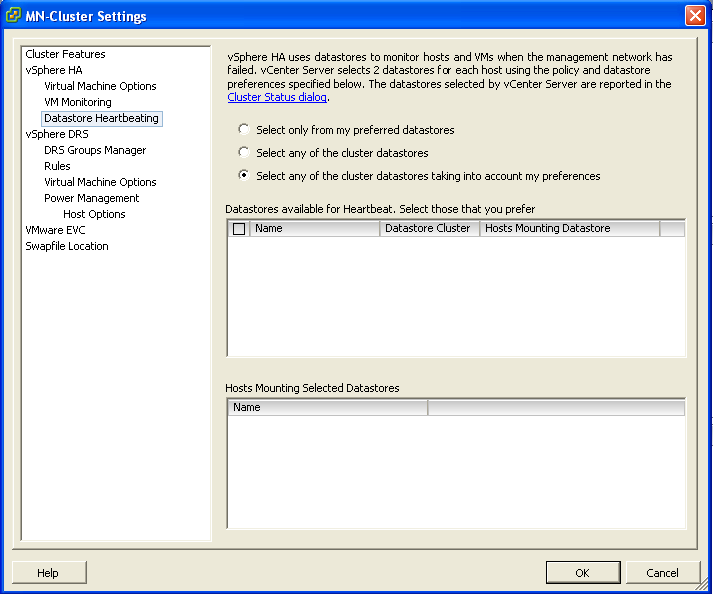

Datastore Heartbeating

In vSphere versions prior to 5.x, machine restarts were always attempted, even if it was only the Management Network which went down and the rest of the VM networks were running fine. This was not a desirable situation. VMware introduced the concept of Datastore Heartbeating, which adds much more resiliency and reduces the false positives that resulted in VMs restarting unnecessarily.

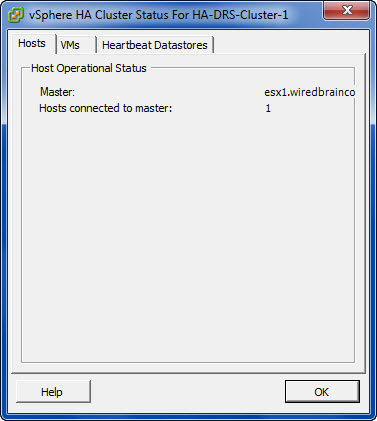

Datastore Heartbeating is used when a master has lost network connectivity with a slave. The mechanism is then used to validate whether a host has failed or is isolated/network partitioned, which is checked through the poweron file mentioned previously. By default HA picks 2 heartbeat datastores. To see which datastores, click on the vCenter name and select Cluster Status.

Isolation and Network Partitioning

A host is considered to be either isolated or network partitioned when it loses network access to a master but has not completely failed.

Isolation

- A host is not receiving any heartbeats from the master

- A host is not receiving any election traffic

- A host cannot ping the isolation address

- Virtual machines may be restarted depending on the isolation response

- A VM will only be shut down or powered off when the isolated host knows there is a master out there that has taken ownership of the VM, or when the isolated host loses access to the home datastore of the VM

Network Partitioning

- A host is not receiving any heartbeats from the master

- A host is receiving election traffic

- An election process will take place and the state will be reported to vCenter; virtual machines may be restarted depending on the isolation response
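The two checklists above can be sketched as a small decision function. This is an illustration of the conditions listed in this post, not the FDM agent's actual logic; the names `HostState` and `classify_host` are hypothetical, and the combination the lists do not cover is deliberately left as undetermined.

```python
from enum import Enum


class HostState(Enum):
    CONNECTED = "connected"
    PARTITIONED = "network partitioned"
    ISOLATED = "isolated"
    UNDETERMINED = "undetermined"


def classify_host(master_heartbeats: bool,
                  election_traffic: bool,
                  isolation_address_pingable: bool) -> HostState:
    """Classify a host from the three observations listed above."""
    if master_heartbeats:
        # Still hearing the master: nothing to decide.
        return HostState.CONNECTED
    if election_traffic:
        # No master heartbeats, but other hosts are still electing.
        return HostState.PARTITIONED
    if not isolation_address_pingable:
        # No heartbeats, no election traffic, no isolation address.
        return HostState.ISOLATED
    # No heartbeats or election traffic, but the isolation address
    # answers: not covered by the lists above, so left open here.
    return HostState.UNDETERMINED


print(classify_host(False, False, False).value)  # isolated
print(classify_host(False, True, False).value)   # network partitioned
```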

What happens if?

- The Master fails

If the slaves have not received any network heartbeats from the master, they will try to elect a new master. The new master will gather the required information and restart the VMs. The datastore lock will expire and the newly elected master will relock the file if it has access to the datastore.

- A Slave fails

As well as monitoring the slave hosts, the master receives heartbeats from the slaves every second. If a slave fails or becomes isolated, the master will check for connectivity for 15 seconds and then see whether the host is still heartbeating to the datastore. Next it will try to ping the management gateway. If both the datastore and management gateway checks prove negative, the master will declare the host failed, determine which VMs need to be restarted, and try to distribute them fairly across the remaining hosts.
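The order of those checks can be sketched as follows. This is a simplified illustration of the sequence described above, not VMware's implementation; `evaluate_slave` and its parameters are hypothetical names, and the inputs stand in for results the master would gather itself.

```python
# The 15 second window comes from the description above; it is shown
# here only as a named constant, the sketch does not actually wait.
NETWORK_CHECK_WINDOW_SECONDS = 15


def evaluate_slave(network_heartbeat: bool,
                   datastore_heartbeat: bool,
                   ping_ok: bool) -> str:
    """Return the master's verdict on a slave.

    network_heartbeat:   heartbeats seen within the check window
    datastore_heartbeat: host is still heartbeating to the datastore
    ping_ok:             the ping check described above succeeded
    """
    if network_heartbeat:
        return "healthy"
    if datastore_heartbeat or ping_ok:
        # The host is alive in some form, so it is not treated as failed.
        return "isolated or partitioned"
    # Both follow-up checks were negative.
    return "failed"


print(evaluate_slave(False, True, False))   # isolated or partitioned
print(evaluate_slave(False, False, False))  # failed
```

Only the last outcome leads to the master restarting that host's VMs on the remaining hosts.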

- Power Outage

If there is a power outage and all hosts power down suddenly, then as soon as power to the hosts returns an election process will be kicked off and a master will be elected. The master reads the protectedlist file, which contains all VMs protected by HA, and then initiates restarts for those VMs which are listed as protected but not running.
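The "protected but not running" selection is essentially a set difference. A minimal sketch, assuming the two inventories are already available as lists of VM names (the function name and sample names are hypothetical):

```python
from typing import Iterable, List


def vms_to_restart(protected: Iterable[str], running: Iterable[str]) -> List[str]:
    """Return protected VMs that are not currently running, sorted by name."""
    return sorted(set(protected) - set(running))


protected_vms = ["app01", "db01", "web01"]
running_vms = ["web01"]
print(vms_to_restart(protected_vms, running_vms))  # ['app01', 'db01']
```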

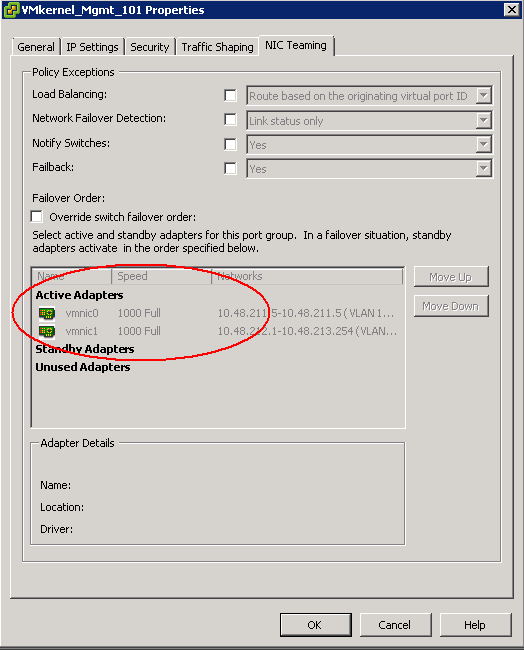

- Complete Management Network failure

First of all, it is a very rare scenario for the Management Network to become unavailable at the same time on all the running hosts in the cluster. VMware recommend having redundant vmnics configured for the host, with each vmkernel management vmnic going into a different management switch for full redundancy. See pic below.

If all the ESXi hosts lose the Management Network, the master and the slaves will remain in the same state, as no election can happen because the FDM agents communicate through the Management Network. Because the VMs are still accessible on the datastores, which the master knows from reading the protectedlist and poweron files there, it can tell whether it is dealing with a complete failure of the Management Network, a failure of itself or a slave, or an isolation/network partition event. Each host will ping the isolation address, get no response, and declare itself isolated. It will then trigger the isolation response, which in our case is to leave VMs powered on.

A host remains isolated until it observes HA network traffic, such as election messages, or it starts getting a response from an isolation address. This means that as long as the host is in an “isolated state” it will continue to validate its isolation by pinging the isolation address. As soon as the isolation address responds, it will initiate an election process or join an existing one, and the cluster will return to a normal state.

Useful Link

Thanks to Iwan Rahabok 🙂

http://virtual-red-dot.blogspot.co.uk/2012/02/vsphere-ha-isolation-partition-and.html