Appropriate BIOS and firmware settings

- Make sure your servers are running the most up-to-date firmware, including the firmware for all third-party cards.

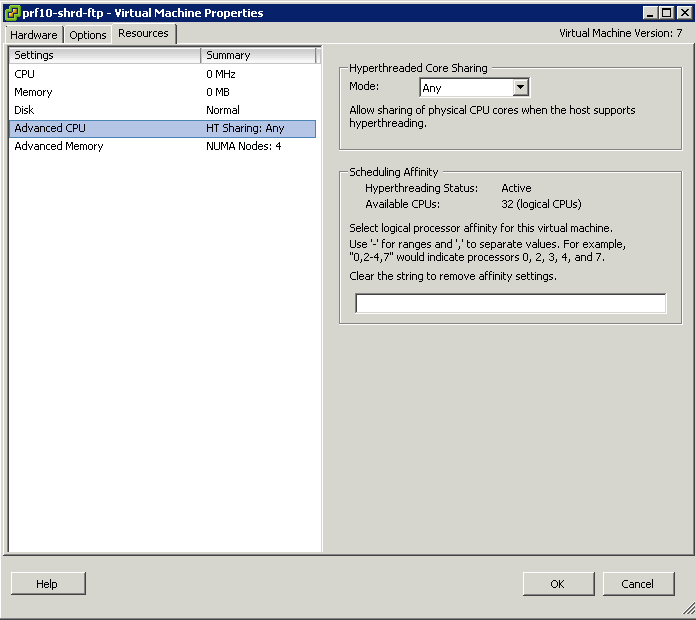

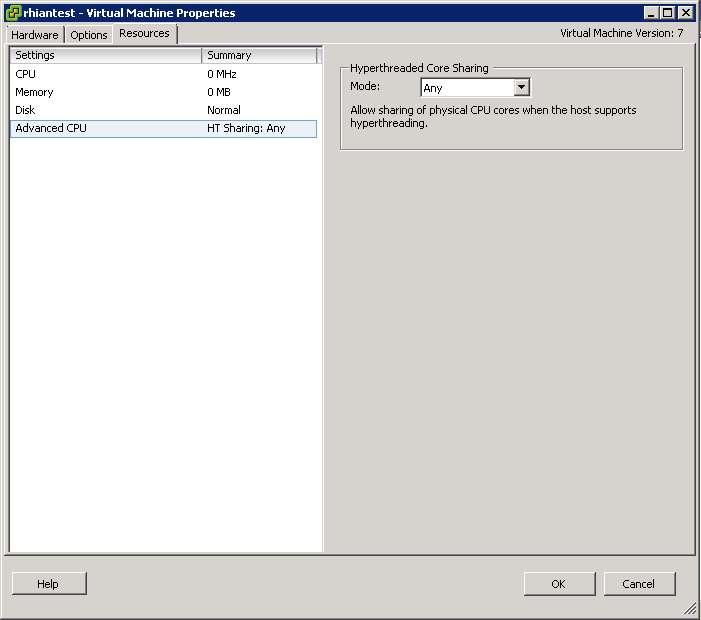

- Enable hyperthreading. Note that you cannot enable hyperthreading on a system with more than 32 physical cores, because of the limit of 64 logical CPUs.

- Make sure the BIOS is set to enable all populated processor sockets and to enable all cores in each socket.

- Enable “Turbo Boost” in the BIOS if your processors support it.

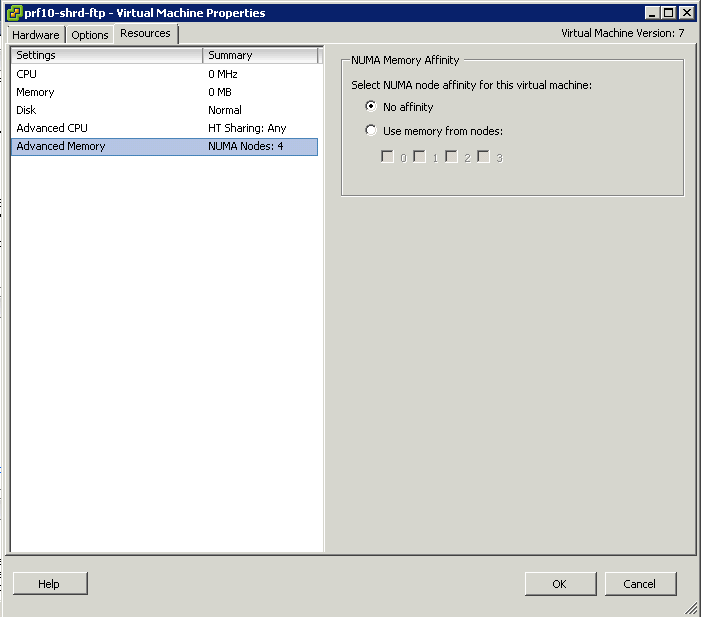

- Some NUMA-capable systems provide an option in the BIOS to disable NUMA by enabling node interleaving. In most cases you will get the best performance by disabling node interleaving (in other words, leaving NUMA enabled).

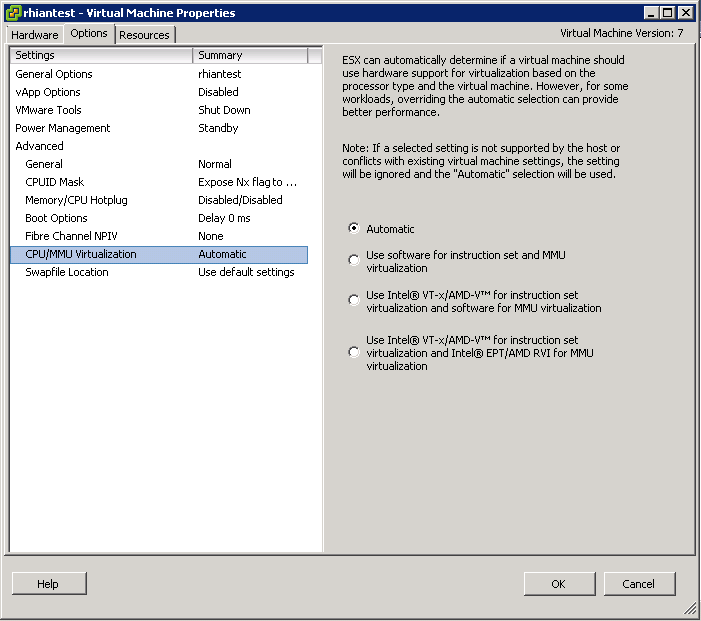

- Hardware-Assisted CPU Virtualization (Intel VT-x and AMD-V): The first generation of hardware virtualization assistance, VT-x from Intel and AMD-V from AMD, became available in 2006. These technologies automatically trap sensitive calls, eliminating the overhead required to do so in software. This allows the use of a hardware virtualization (HV) virtual machine monitor (VMM) as opposed to a binary translation (BT) VMM.

- Hardware-Assisted MMU Virtualization (Intel EPT and AMD RVI): Some recent processors also include a feature that reduces the overhead of memory management unit (MMU) virtualization by providing hardware support to virtualize the MMU. ESX 4.0 supports this feature both in AMD processors, where it is called rapid virtualization indexing (RVI) or nested page tables (NPT), and in Intel processors, where it is called extended page tables (EPT).

- Cache prefetching mechanisms (sometimes called DPL Prefetch, Hardware Prefetcher, L2 Streaming Prefetch, or Adjacent Cache Line Prefetch) usually help performance, especially when memory access patterns are regular. When running applications that access memory randomly, however, disabling these mechanisms might result in improved performance.

- ESX 4.0 supports the Enhanced Intel SpeedStep® and Enhanced AMD PowerNow!™ CPU power management technologies, which can save power when a host is not fully utilized. However, because these and other power-saving technologies can reduce performance in some situations, you should consider disabling them when performance considerations outweigh power considerations.

- Disable C1E halt state in the BIOS.

- Disable any other power-saving mode in the BIOS.

- Disable any unneeded devices from the BIOS, such as serial and USB ports.
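The hyperthreading restriction in the list above comes down to simple arithmetic: with two logical CPUs per physical core, 32 cores already reach the 64-logical-CPU limit. The sketch below illustrates that check. The function names are hypothetical, the limit of 64 is taken from the note above, and two threads per core is an assumption that matches Intel Hyper-Threading.

```python
# Illustrative sketch only: the function names are hypothetical, and the
# limit of 64 logical CPUs is the figure quoted in the hyperthreading note.
MAX_LOGICAL_CPUS = 64


def logical_cpus(sockets: int, cores_per_socket: int, hyperthreading: bool) -> int:
    """Return the number of logical CPUs the host would present."""
    # Assumption: hyperthreading exposes two logical CPUs per physical core.
    threads_per_core = 2 if hyperthreading else 1
    return sockets * cores_per_socket * threads_per_core


def can_enable_hyperthreading(sockets: int, cores_per_socket: int) -> bool:
    """True if enabling hyperthreading keeps the host within the limit."""
    return logical_cpus(sockets, cores_per_socket, True) <= MAX_LOGICAL_CPUS


if __name__ == "__main__":
    # Example host layouts as (sockets, cores per socket).
    for sockets, cores in [(2, 8), (4, 8), (4, 12)]:
        total = logical_cpus(sockets, cores, True)
        verdict = "OK" if can_enable_hyperthreading(sockets, cores) else "over the limit"
        print(f"{sockets} x {cores} cores -> {total} logical CPUs with HT: {verdict}")
```

A host with 4 sockets of 8 cores sits exactly at the limit, while 4 sockets of 12 cores (48 physical cores) would exceed it with hyperthreading enabled.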

Identify appropriate driver revisions required for optimal ESXi host performance

Check the VMware HCL and/or VMware KB 2030818 for the recommended drivers and firmware for each vSphere version.