General Rules for Processor Scheduling

- ESX(i) schedules VMs onto and off of processors as needed

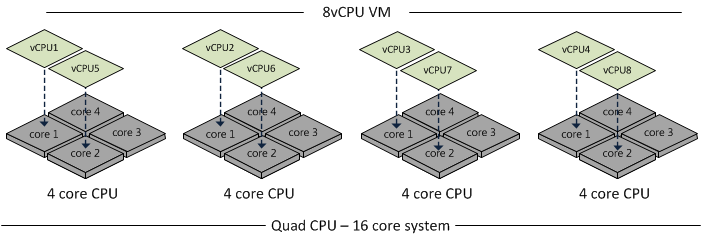

- Whenever a VM is scheduled to a processor, a core must be available for every one of its vCPUs at the same time; otherwise the VM cannot be scheduled at all

- If a VM cannot be scheduled to a processor when it needs access, VM performance can suffer a great deal

- When VMs are ready to run but cannot be scheduled onto a processor, the time they spend waiting is reported as what VMware calls CPU %Ready

- CPU %Ready manifests itself as a utilisation issue but is actually a scheduling issue

- VMware attempts to schedule a VM on the same core over and over again, but sometimes it has to move the VM to another processor. Processor caches hold data that allows the guest OS to perform better. If the VM is moved across sockets and the cache isn’t shared between them, the cache on the new socket has to be loaded with that data again.

- Maintain consistent Guest OS configurations
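
The all-or-nothing rule above can be illustrated with a toy model. This is only a sketch of the rule as stated in these notes, not the actual ESX(i) scheduler; the function names, the VM names and the 4-core host are all made up for illustration.

```python
# Toy model of the "all vCPUs or nothing" rule described above.
# NOT the real ESX(i) scheduler: names and numbers are hypothetical.

def can_schedule(vcpus: int, free_cores: int) -> bool:
    """A VM fits only if every one of its vCPUs can get a core at once."""
    return vcpus <= free_cores


def schedule_tick(ready_vms, total_cores):
    """Place ready VMs in list order for one scheduling pass.

    ready_vms is a list of (name, vcpu_count) tuples.
    Returns (scheduled_names, waiting_names).
    """
    free = total_cores
    scheduled, waiting = [], []
    for name, vcpus in ready_vms:
        if can_schedule(vcpus, free):
            free -= vcpus
            scheduled.append(name)
        else:
            # The VM is ready to run but has to wait: this waiting
            # time is what shows up as CPU %Ready.
            waiting.append(name)
    return scheduled, waiting


vms = [("web", 1), ("db", 4), ("app", 2), ("batch", 1)]
scheduled, waiting = schedule_tick(vms, total_cores=4)
print("scheduled:", scheduled)
print("waiting:", waiting)
```

In this example the 4-vCPU VM has to wait even though the host is not fully busy when its turn comes, because one core is already taken by a single-vCPU VM.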

Scheduling Issues

- Mixing single, dual and quad vCPU VMs on the same ESX(i) server can create major scheduling problems. This is especially true when the ESX(i) server has a low core density or averages moderate to high utilisation levels

- Where possible, reduce VMs to a single vCPU, unless they host an application which requires multiple CPUs or high utilisation on both cores of that particular VM makes reducing to one core impossible

- Keep an eye on scheduling issues, especially CPU %Ready. More than 2% indicates processor scheduling issues
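
As a rough aid for checking the 2% figure: esxtop shows %RDY directly, but the vCenter performance charts report CPU ready as a summation in milliseconds per sample interval, which has to be converted to a percentage first. The sketch below assumes the real-time chart interval of 20 seconds; the function names are hypothetical and the sample values are invented.

```python
# Convert a CPU ready summation (milliseconds) into a percentage.
# Assumption: the value comes from a chart with a known sample interval
# (20 seconds for vCenter real-time charts). Sample numbers are made up.

def ready_percent(ready_ms: float, interval_s: float = 20.0) -> float:
    """Share of the sample interval the VM spent ready but not running."""
    return ready_ms * 100.0 / (interval_s * 1000.0)


def has_scheduling_issue(ready_ms: float,
                         interval_s: float = 20.0,
                         threshold_pct: float = 2.0) -> bool:
    """Apply the 2% rule of thumb from these notes."""
    return ready_percent(ready_ms, interval_s) > threshold_pct


for sample_ms in (200, 1000):
    pct = ready_percent(sample_ms)
    print(f"{sample_ms} ms ready -> {pct:.1f}% "
          f"(issue: {has_scheduling_issue(sample_ms)})")
```

Charts with longer roll-up intervals need the matching `interval_s` value, otherwise the percentage will be badly overstated.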

Performance enhancers for vSphere

1. Non-scheduling of idle processors

vSphere has the ability to skip scheduling of idle processors. For example, if a quad processor VM has activity on only 1 core, vSphere can sometimes schedule only that single core. A multi-threaded app will likely be using most or all of its cores most of the time. If a VM has CPUs that sit idle a lot, review whether this VM actually needs multiple processors

If your application is not multi-threaded, you gain nothing by adding cores to the VM, and you make the VM more difficult to schedule

2. Processor Skew

Guest OSs expect to see progress on all of their cores all of the time. vSphere allows a small amount of skew, whereby the processors need not be completely in sync, but this has to be kept within reasonable limits
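
The idea of bounded skew can be pictured with a small toy model. This is a deliberately simplified sketch and not how the vSphere scheduler is implemented: the function name, the progress counters and the 5 ms limit are all hypothetical values chosen for illustration.

```python
# Toy illustration of bounded skew between the vCPUs of one VM.
# NOT the vSphere algorithm: the limit and the counters are hypothetical.

def vcpus_too_far_ahead(progress_ms, max_skew_ms):
    """Return the indexes of vCPUs that lead the slowest sibling
    by more than the allowed skew."""
    slowest = min(progress_ms)
    return [i for i, p in enumerate(progress_ms)
            if p - slowest > max_skew_ms]


in_sync = [100, 98, 97, 99]    # every vCPU within 5 ms of the slowest
lagging = [100, 98, 60, 99]    # vCPU 2 has fallen well behind

print(vcpus_too_far_ahead(in_sync, max_skew_ms=5))
print(vcpus_too_far_ahead(lagging, max_skew_ms=5))
```

In the second case a scheduler following this model would have to hold back vCPUs 0, 1 and 3 until vCPU 2 catches up, which is why a VM with more vCPUs than it needs can end up waiting on itself.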

For a detailed description of how ESX(i) schedules VMs to processors, please read:

http://www.vmware.com/files/pdf/perf-vsphere-cpu_scheduler.pdf