What is VMware Tanzu?

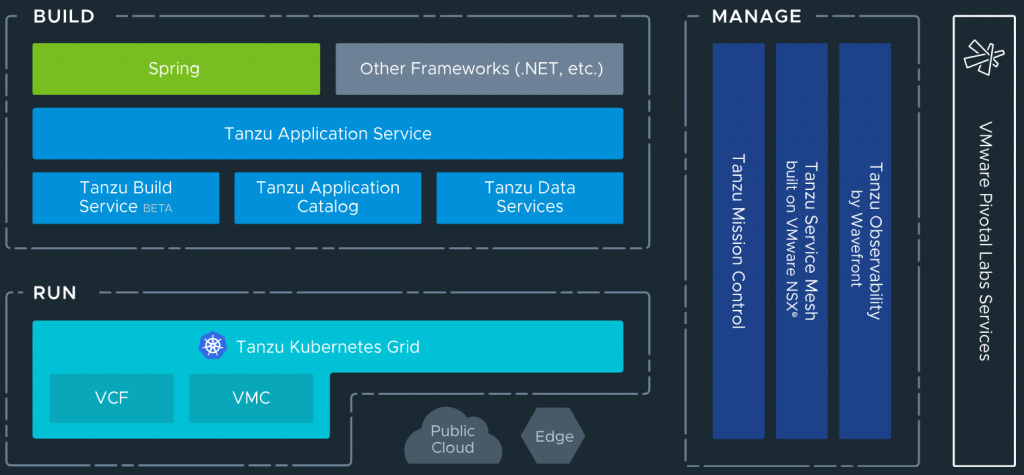

VMware Tanzu is a portfolio of services for modernizing Kubernetes-controlled, container-based applications and infrastructure.

- Application services: Modern application platforms

- Build service: Container creation and management. Heptio, Bitnami and Pivotal come under this category. Bitnami packages and delivers 180+ Kubernetes applications, ready-to-run virtual machines and cloud images. Pivotal controls one of the most popular application frameworks, “Spring”, and offers customers the Pivotal Application Service (PAS). Pivotal recently announced that PAS and its components, Pivotal Build Service and Pivotal Function Service, are being evolved to run on Kubernetes.

- Application catalogue: Production ready, open source containers

- Data services: Cloud native data and messaging including Gemfire, RabbitMQ and SQL

- Kubernetes grid: Enterprise ready runtime

- Mission Control: Centralised cluster management

- Observability: Modern app monitoring and analytics

- Service mesh: App wide networking and control

VMware Tanzu services

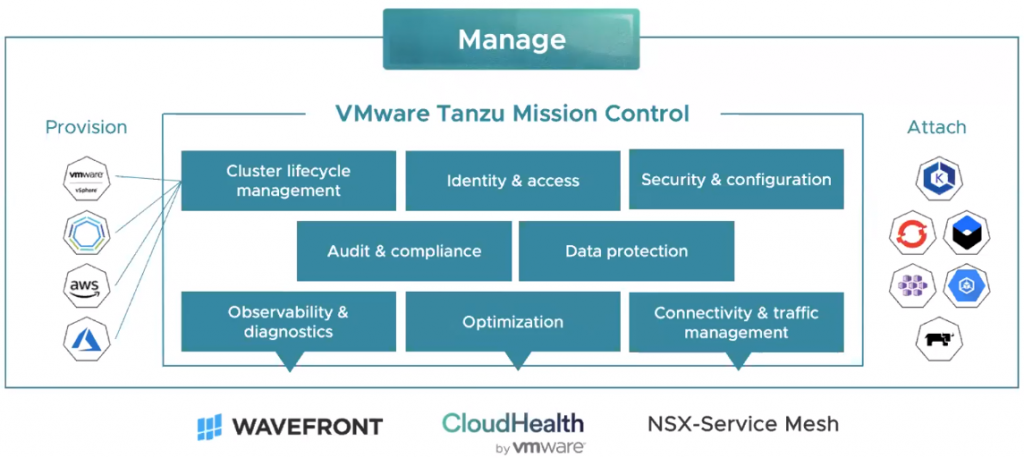

What is Tanzu Mission Control?

VMware Tanzu Mission Control is a SaaS-based control plane that lets customers manage all their Kubernetes clusters, across vSphere, VMware PKS, public clouds, managed services and packaged distributions, from a single point of control and a single pane of glass. This will allow policies for access, quotas, back-up, security and more to be applied to individual clusters or to groups of clusters. It will support a wide array of operations such as life-cycle management, including initial deployment, upgrade, scale and delete. This will be achieved via the open source Cluster API project.

As these environments evolve, containers and applications can proliferate. How do you keep all of this under control, so that developers can do their jobs and operations can keep the infrastructure in check? Tanzu Mission Control is intended to help with the following:

- Map enterprise identity to Kubernetes RBAC across clusters

- Define policies once and push them across clusters

- Manage cluster lifecycle consistently

- Unified view of cluster metrics, logs and data

- Cross cluster cloud data

- Automated policy controlled cross cluster traffic

- Monitor Kubernetes costs
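
The idea of defining a policy once and pushing it across clusters can be sketched with a small model. This is only an illustration, not the Tanzu Mission Control API: the class names, the policy keys and the rule that a cluster-level setting overrides the group-level one are all assumptions made for the example.

```python
from dataclasses import dataclass, field


@dataclass
class Cluster:
    name: str
    # Hypothetical cluster-specific policy settings.
    policies: dict = field(default_factory=dict)


@dataclass
class ClusterGroup:
    name: str
    # Hypothetical policies defined once for the whole group.
    policies: dict = field(default_factory=dict)
    clusters: list = field(default_factory=list)


def effective_policies(group, cluster):
    """Start from the group's policies, then apply cluster-level overrides.

    The override order is an assumption of this sketch.
    """
    merged = dict(group.policies)
    merged.update(cluster.policies)
    return merged


prod = ClusterGroup(
    name="prod",
    policies={"access": "sso-admins", "quota.cpu": "64", "backup": "daily"},
    clusters=[
        Cluster("vsphere-east"),
        Cluster("cloud-west", {"quota.cpu": "32"}),
    ],
)

for cluster in prod.clusters:
    print(cluster.name, effective_policies(prod, cluster))
```

Every cluster in the group picks up the access and backup policies without them being repeated per cluster, which is the point of grouping clusters in the first place.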

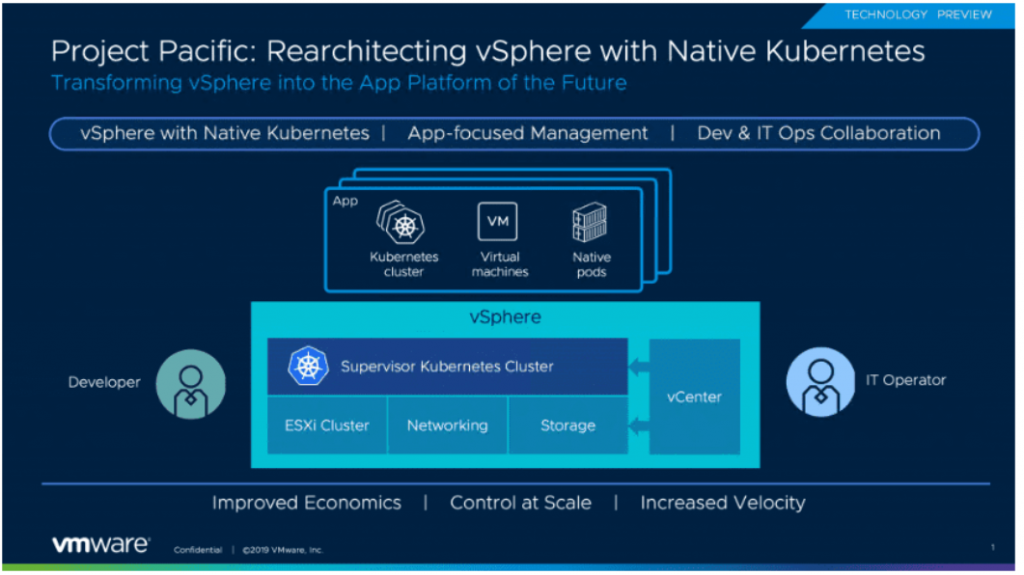

What is Project Pacific?

Project Pacific is an initiative to embed Kubernetes into the control plane of vSphere for managing Kubernetes workloads on ESXi hosts. The integration of Kubernetes and vSphere will happen at the API and UI layers, and also at the core virtualization layer, where ESXi will run Kubernetes natively. A developer will see and utilise Project Pacific as a Kubernetes cluster, while an IT admin will still see the normal vSphere infrastructure.

The control plane will allow the deployment of:

- Virtual Machines and clusters of VMs

- Kubernetes Clusters

- Pods

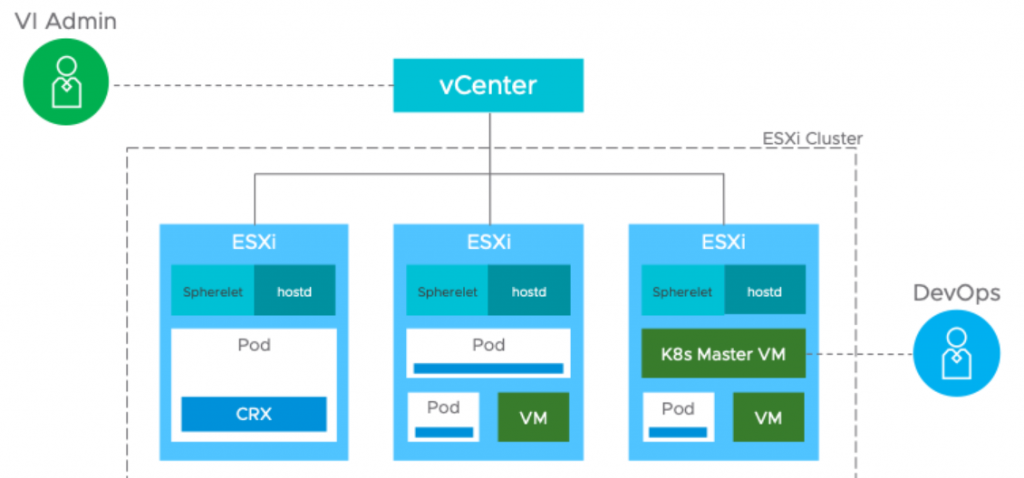

The Supervisor cluster

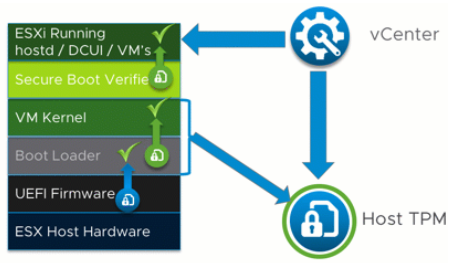

The control plane is made up of a supervisor cluster using ESXi hosts as the worker nodes instead of Linux. This is achieved by integrating a Spherelet directly into ESXi. The Spherelet doesn’t run in a VM; it runs directly on ESXi. This allows workloads or pods to be deployed and run natively in the hypervisor, alongside normal Virtual Machine workloads. A Supervisor Cluster can be thought of as a group of ESXi hosts running virtual machine workloads, while at the same time acting as Kubernetes worker nodes and running container workloads.

vSphere Native Pods

The supervisor cluster allows workloads or pods to be deployed. Native pods are containers that comply with the Kubernetes Pod specification. This functionality is provided by a new container runtime built into ESXi called CRX. CRX optimises the Linux kernel and hypervisor and removes some of the traditional heavy configuration of a virtual machine, enabling the binary image and executable code to be loaded and booted quickly. The Spherelet ensures containers are running in pods.

Pods are created on a network internal to the Kubernetes nodes. Pod IP addresses are not stable, so pods are normally reached through a Service rather than addressed individually. A Service in Kubernetes allows a group of pods to be exposed by a common IP address, helping define network routing and load balancing policies without having to understand the IP addressing of individual pods.
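
To illustrate how a Service fronts a group of pods, the sketch below builds a standard Kubernetes Service manifest as a Python dictionary and mimics the label matching that decides which pods sit behind it. The pod list, names and IP addresses are made up for the example, and `endpoints_for` is a hypothetical helper, not part of any Kubernetes client library.

```python
# A Service manifest in the upstream Kubernetes v1 shape.
service = {
    "apiVersion": "v1",
    "kind": "Service",
    "metadata": {"name": "web"},
    "spec": {
        "selector": {"app": "web"},  # pods carrying this label are selected
        "ports": [{"port": 80, "targetPort": 8080}],
    },
}

# Illustrative pods; names, IPs and labels are invented for this sketch.
pods = [
    {"name": "web-1", "ip": "10.0.1.4", "labels": {"app": "web"}},
    {"name": "web-2", "ip": "10.0.2.7", "labels": {"app": "web"}},
    {"name": "db-1", "ip": "10.0.1.9", "labels": {"app": "db"}},
]


def endpoints_for(service, pods):
    """Return the IPs of pods whose labels match every key in the selector."""
    selector = service["spec"]["selector"]
    return [
        pod["ip"]
        for pod in pods
        if all(pod["labels"].get(key) == value for key, value in selector.items())
    ]


print(endpoints_for(service, pods))
```

Clients talk to the Service address only; the set of pod IPs behind it can change without the clients needing to know.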

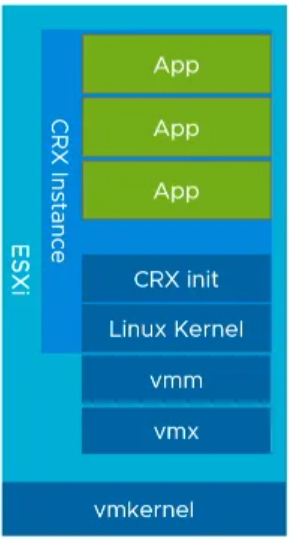

CRX – Container runtime for ESXi

Each virtual machine has a vmm (virtual machine monitor) and vmx (virtual machine executive) process that handles all of the other subprocesses needed to run a VM. To implement Kubernetes, VMware introduced a new process called CRX (the container runtime executive), which manages the processes associated with a Kubernetes Pod. Each ESXi server also runs a hostd-like agent called the Spherelet, analogous to the kubelet in standard Kubernetes.

A CRX instance is a specific form of VM which is packaged with ESXi and provides a Linux Application Binary Interface (ABI) through a very isolated environment. VMware supplies the Linux kernel image used by CRX instances. When a CRX instance is brought up, ESXi pushes the Linux image directly into the CRX instance. Because a CRX instance is a stripped-down VM with most of the other features removed, it can be launched in less than a second.

CRX instances have a CRX init process, which provides the endpoint for communication with ESXi and allows the environment running inside the CRX instance to be managed.

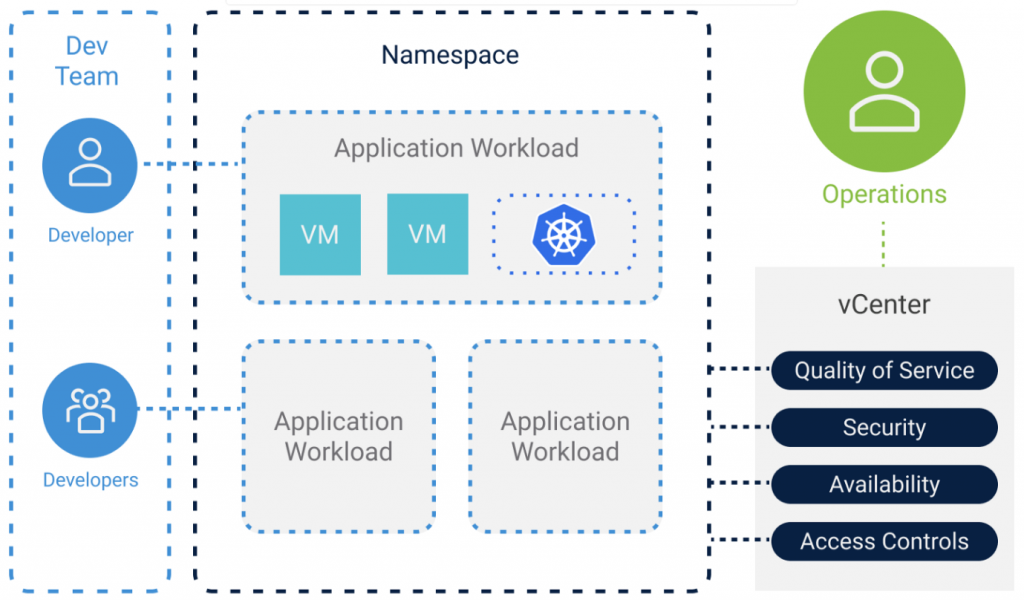

Namespaces

A Namespace in the Kubernetes cluster includes a collection of different objects such as CRX VMs or VMX VMs. Namespaces are commonly used to provide multi-tenancy across applications or users, and to manage resource quotas.
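
As a rough illustration of how a namespace and its quota fit together, the sketch below builds upstream Kubernetes `Namespace` and `ResourceQuota` manifests as Python dictionaries. It shows plain Kubernetes objects only; how vSphere itself creates and manages namespaces is not covered here, and the function name, tenant name and limits are assumptions made for the example.

```python
import json


def namespace_with_quota(name, cpu, memory, max_pods):
    """Build a Namespace and a ResourceQuota scoped to it (hypothetical helper)."""
    namespace = {
        "apiVersion": "v1",
        "kind": "Namespace",
        "metadata": {"name": name},
    }
    quota = {
        "apiVersion": "v1",
        "kind": "ResourceQuota",
        "metadata": {"name": f"{name}-quota", "namespace": name},
        "spec": {
            "hard": {
                "requests.cpu": cpu,        # total CPU requests allowed
                "requests.memory": memory,  # total memory requests allowed
                "pods": str(max_pods),      # maximum number of pods
            }
        },
    }
    return [namespace, quota]


# Example tenant; the name and limits are illustrative.
manifests = namespace_with_quota("team-a", cpu="8", memory="16Gi", max_pods=20)
print(json.dumps(manifests, indent=2))
```

Giving each team or application its own namespace with a quota is what keeps one tenant from consuming the resources of another.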

Guest Kubernetes Clusters

It is important to understand that the Supervisor Cluster itself does not deliver regular Kubernetes-based clusters. The supervisor Kubernetes cluster is a specific implementation of Kubernetes for vSphere which is not fully conformant with upstream Kubernetes. If you want general purpose Kubernetes workloads, you have to use Guest Clusters. Guest Clusters in vSphere use the open source Cluster API project to manage the lifecycle of Kubernetes clusters, which in turn uses the VM operator to manage the VMs that make up a guest cluster.

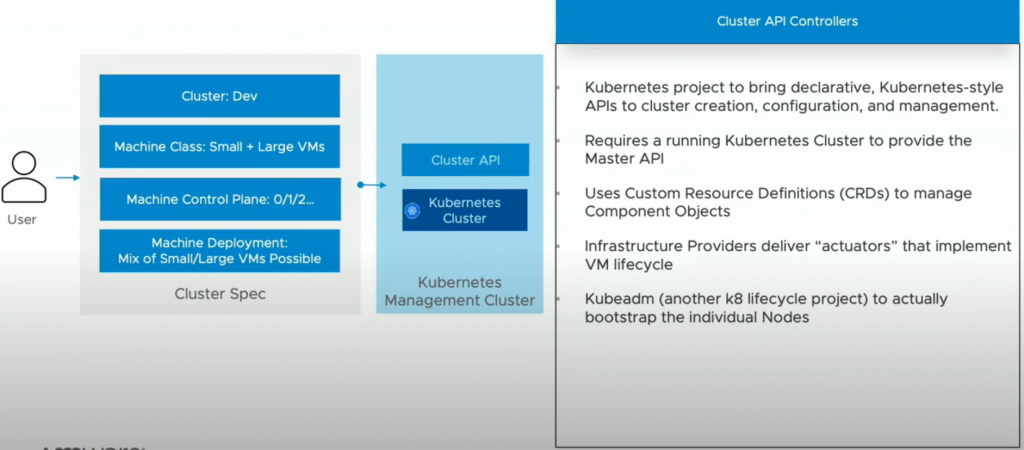

What is Cluster API?

Cluster API is an open source project for managing the lifecycle of a Kubernetes cluster using Kubernetes itself. You start with a management cluster, which gives you an API with custom resources or operators.
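
The declarative style behind this approach can be sketched as a reconcile step: compare the desired state with what actually exists and work out the actions needed to converge. The code below is a toy model and not Cluster API's implementation; the `reconcile` function, the `worker-N` naming scheme and the choice of which machines to delete are all assumptions for illustration.

```python
def reconcile(desired_replicas, machines):
    """Return the actions that bring the machine list to the desired count.

    `machines` is a list of existing machine names. Surplus machines are
    removed from the end of the list, which is an arbitrary choice here.
    """
    actions = []
    existing = set(machines)

    # Scale up: create machines with names that are not already in use.
    missing = desired_replicas - len(machines)
    index = 0
    while missing > 0:
        name = f"worker-{index}"
        if name not in existing:
            actions.append(("create", name))
            existing.add(name)
            missing -= 1
        index += 1

    # Scale down: delete anything beyond the desired count.
    for name in machines[desired_replicas:]:
        actions.append(("delete", name))

    return actions


print(reconcile(3, ["worker-0"]))
print(reconcile(1, ["worker-0", "worker-1"]))
print(reconcile(2, ["worker-0", "worker-1"]))
```

An operator running in the management cluster would repeat a step like this whenever the declared cluster resource or the observed machines change, so the user only edits the desired state.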