The VMKernel CPU scheduler is crucial to providing good performance in a consolidated environment. Most processors these days are equipped with multiple cores per processor and controlling, managing and scheduling these multi way processors is essential. It assigns execution contexts to processors

The CPU Scheduler

The CPU Scheduler has the following features

- Schedules the vCPUs on physical CPUs

- Enforces the proportional-share algorithm for CPU usage

- Supports SMP VMs

- Uses relaxed co-scheduling for SMP VMs

- Uses NUMA

- Processor Topology/Cache aware

- Hyperthreading

Schedules the vCPUs on physical CPUs

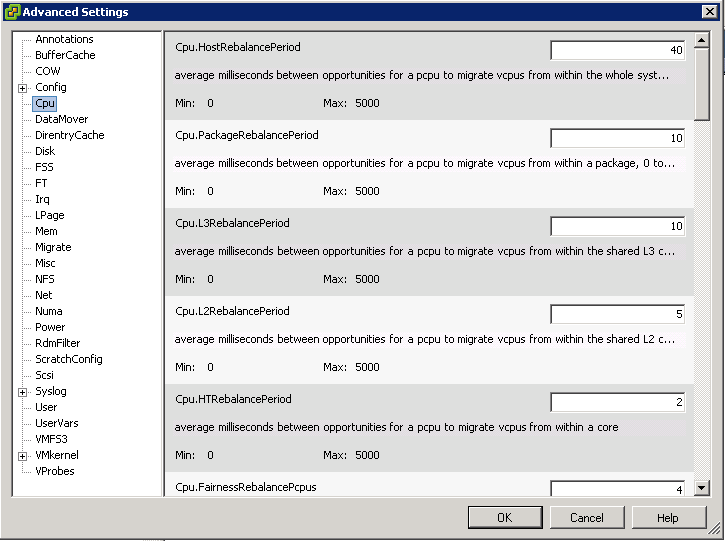

The Scheduler checks physical utilisation every 2-40ms and migrates vCPUs as necessary

Enforces the proportional-share algorithm for CPU usage

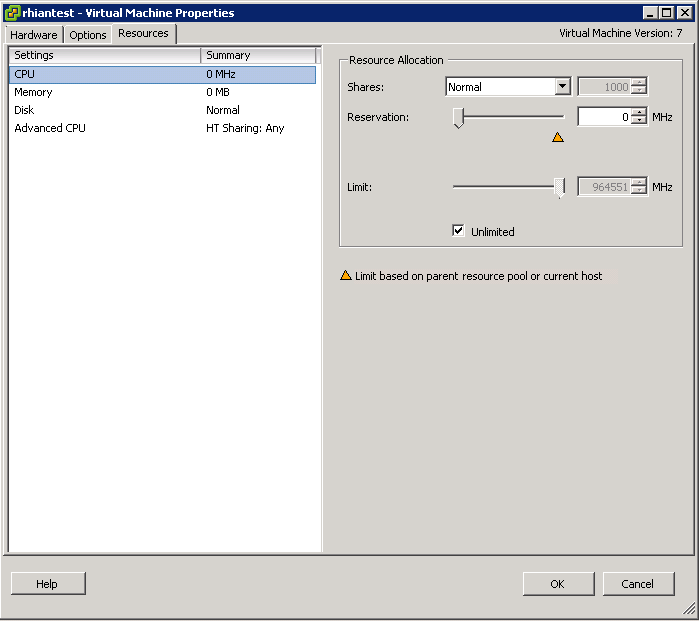

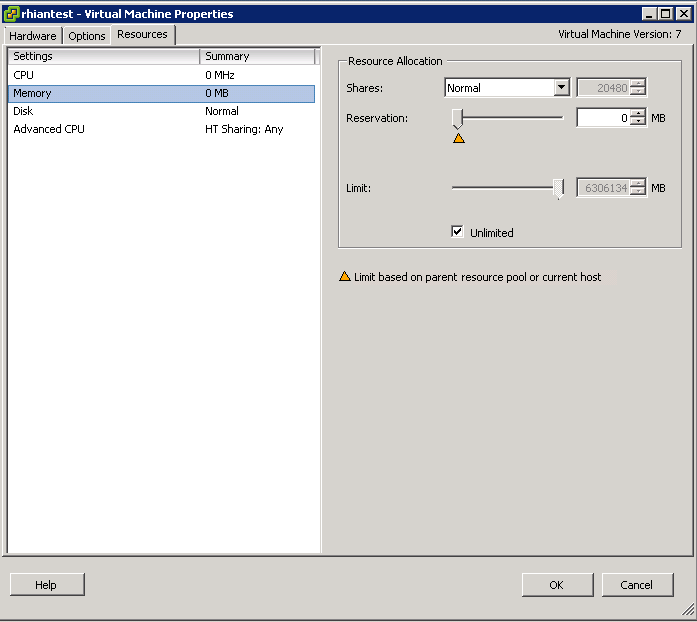

When CPUs are over-committed, hosts time slice physical CPUs across all VMs where each CPU is also prioritised by resource allocation settings in terms of Shares, Reservations and Limits)

Supports SMP VMs

If a VM is configured with multiple processors then it believes that it is running on a dedicated physical multiprocessor. ESXi maintains this by using co-scheduling of the vCPUs.

Co-Scheduling is a technique for scheduling, descheduling, preempting and blocking transactions across multiple processors. Without it, vCPUs would be scheduled independently, breaking the guests assumption regarding uniform process.

The CPU Scheduler takes “Skew” into account when scheduling vCPUs. Skew is the difference in execution rates between 2 or more vCPUs in an SMP VM. The Scheduler maintains a fine grained cumulative skew value for each vCPU in a VM. Time spent in the hypervisor is excluded from the process as sometimes the operations do not benefit from being co-scheduled. The vCPU is considered to be skewed if its cumulative skew value exceeds a configurable threshold, usually a few seconds

Uses relaxed co-scheduling for SMP VMs

Relaxed Co-Scheduling refers to a technology where vCPUs have become skewed and must be co-started. When any vCPU is scheduled, it ensures that all other vCPUs that are behind will also be scheduled

The vCPUs that move too far forward are stopped and wait for the other VMs to catch up. An idle vCPU does not gather skew and is classed as if it was running normally

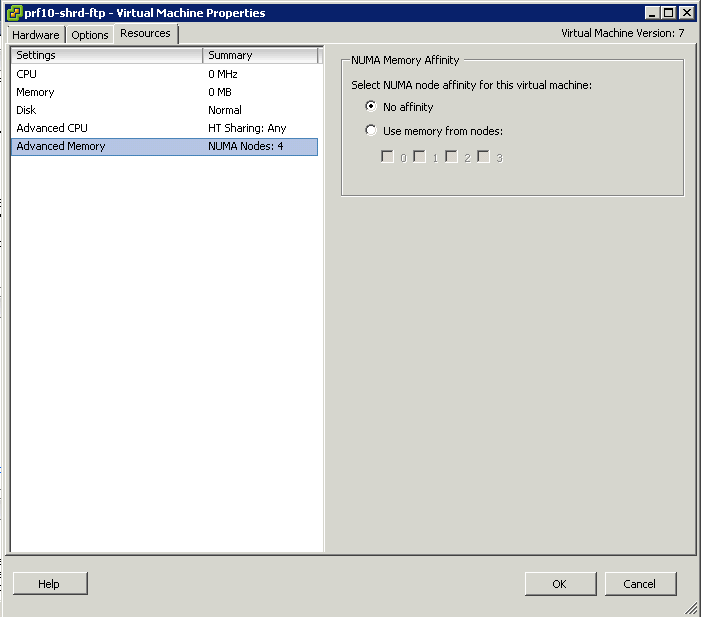

Uses NUMA

Please see this blog post for more information on NUMA

http://www.electricmonk.org.uk/2012/03/01/numa/

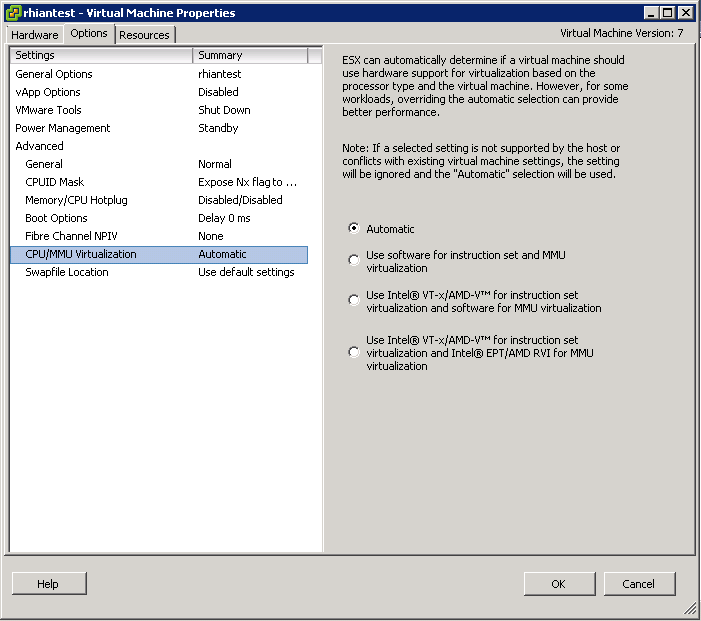

Processor Topology/Cache aware

Basically the CPU Scheduler uses Processor Topology information to calculate and optimise the placement of vCPUs on to different sockets using socket, core and logical processor information

The CPU Scheduler also takes advantage of the Shared Last Level Cache which exists within cores on the same processor. This is a memory cache that has a dedicated channel to a CPU socket bypassing the main memory bus which makes it run at the same speed of the CPU

In some situations the CPU scheduler will spread the load across all sockets and sometimes it can be beneficial to schedule all vCPus on to the same socket. Dependent on workload and over/under committed systems

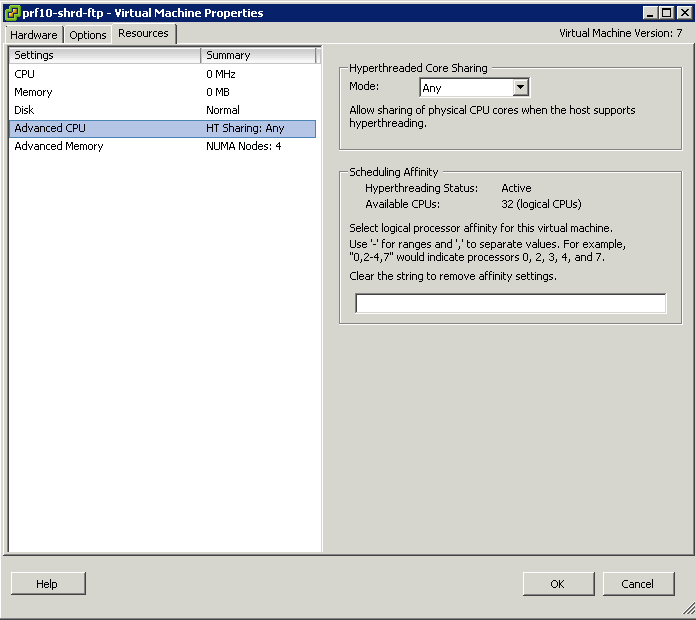

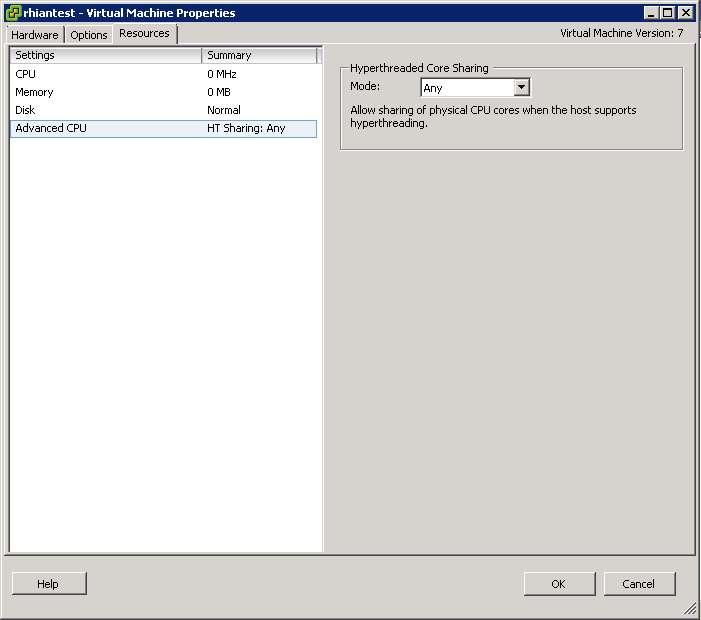

Hyperthreading

The applications most likely to benefit are 3D rendering programs, heavy-duty audio/video transcoding apps, and scientific applications built for maximum multi-threaded performance. But you may also enjoy a performance boost when encoding audio files in iTunes, playing 3D games and zipping/unzipping folders. The boost in performance can be up to 30%, although there will also be situations where Hyper-Threading provides no boost at all.

Hyper-Threading is where two threads are able to run on one single-threaded core. When a thread on the core in question is stalling or in a halt state, hyper-threading enables the core to work on a second thread instead. It makes the OS think that the processor has double the number of cores, and often yields a performance improvement