There are five FSMO roles. They are:

- Schema Master

- Domain Naming Master

- Infrastructure Master

- Relative ID (RID) Master

- Primary Domain Controller (PDC) Emulator

Two of them are assigned only once in the forest, in the domain at the forest root:

- Schema Master

- Domain Naming Master

Three of those FSMO roles are needed once in every domain in the forest:

- Infrastructure Master

- Relative ID (RID) Master

- Primary Domain Controller (PDC) Emulator
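The split between forest-wide and per-domain roles determines how many role assignments a forest carries in total. The following Python sketch only illustrates that arithmetic; the function names and the idea of passing in a list of domain names are hypothetical and not part of any Active Directory API.

```python
# Scope of each FSMO role, as listed above.
FOREST_WIDE_ROLES = ["Schema Master", "Domain Naming Master"]
PER_DOMAIN_ROLES = ["Infrastructure Master", "RID Master", "PDC Emulator"]


def count_role_holders(domain_count):
    """Return the total number of FSMO role assignments in a forest."""
    if domain_count < 1:
        raise ValueError("a forest contains at least one domain")
    return len(FOREST_WIDE_ROLES) + len(PER_DOMAIN_ROLES) * domain_count


def list_role_assignments(domains):
    """Pair every role with its scope.

    Assumption: the first entry in `domains` is the forest root domain.
    """
    root = domains[0]
    assignments = [(role, "forest ({})".format(root)) for role in FOREST_WIDE_ROLES]
    for domain in domains:
        assignments += [(role, domain) for role in PER_DOMAIN_ROLES]
    return assignments


if __name__ == "__main__":
    # Hypothetical domain names, used only for illustration.
    for role, scope in list_role_assignments(["example.com", "emea.example.com"]):
        print("{:<24}{}".format(role, scope))
```

A single-domain forest therefore has 5 role assignments, and a forest with three domains has 2 + 3 × 3 = 11, although several of them may sit on the same domain controller.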

Schema Master

Every change to the schema is made on the domain controller that holds the schema master role. From there, the schema updates are replicated through normal replication to all the domains in the forest. It’s advisable to place the schema master role on the same domain controller (DC) as the primary domain controller (PDC) emulator.

Domain Naming Master

This role is not used very often, only when you add or remove domains in the forest. It ensures that every domain name in the forest is unique: as domains join or leave the forest, the domain naming master makes the updates in Active Directory. Only this DC actually commits those changes to the directory. The domain naming master also commits changes to application partitions.

Infrastructure Master

This role keeps references to objects in other domains up to date. When a referenced object in another domain is renamed or moved, the infrastructure master updates the reference and the change then replicates to the other domain controllers in its domain. The infrastructure master is a translator between globally unique identifiers (GUIDs), security identifiers (SIDs), and distinguished names (DNs) for foreign domain objects. If you’ve ever looked at the membership of a domain local group that has members from other domains, you may have seen those users and groups from the other domain listed only by their SID. The infrastructure master of the domain that holds the group is responsible for translating those SIDs into names.

Usually, you do not put the infrastructure master role on a domain controller that holds the global catalog. However, if you’re in a single-domain forest, the infrastructure master has no work to do, since there are no foreign principals to translate.
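The placement rule can be written down as a small check. This is a minimal sketch under stated assumptions: the function and parameter names are hypothetical, and it also encodes one widely documented exception, namely that the conflict disappears when every domain controller in the domain is a global catalog.

```python
def infrastructure_master_placement_ok(
    forest_domain_count,
    holder_is_global_catalog,
    all_dcs_are_global_catalogs=False,
):
    """Return True if the infrastructure master placement follows the usual guidance.

    forest_domain_count: number of domains in the forest.
    holder_is_global_catalog: whether the role holder also hosts the global catalog.
    all_dcs_are_global_catalogs: whether every DC in the holder's domain is a
        global catalog (assumed exception, see the paragraph above this block).
    """
    if forest_domain_count < 1:
        raise ValueError("a forest contains at least one domain")
    if forest_domain_count == 1:
        # Single-domain forest: no foreign principals, so nothing to translate.
        return True
    if all_dcs_are_global_catalogs:
        # Every DC already holds the data, so the placement no longer matters.
        return True
    return not holder_is_global_catalog


if __name__ == "__main__":
    print(infrastructure_master_placement_ok(1, holder_is_global_catalog=True))
    print(infrastructure_master_placement_ok(3, holder_is_global_catalog=True))
```

The check deliberately returns a plain boolean so that it could be dropped into an inventory or audit script without further dependencies.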

Relative ID (RID) Master

This role is responsible for making sure each security principal has a different identifier. The relative ID master, or RID master, hands out batches of relative IDs to individual domain controllers; each domain controller can then use its allotment to create new users, groups, and computers. When domain controllers need more relative IDs in reserve, they request them from, and are assigned them by, the domain controller with the RID master FSMO role.

It is recommended that the RID master FSMO role be assigned to whichever domain controller has the PDC emulator FSMO role.
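To make the batch mechanism concrete, here is a small Python simulation. It is a sketch, not a description of the real implementation: the block size of 500 and the starting RID of 1000 are assumptions chosen for illustration, the domain SID is made up, and the class names are hypothetical. Real domain controllers also request a new batch before the old one is used up, whereas this sketch refills only when the pool is empty.

```python
RID_BLOCK_SIZE = 500        # assumed batch size, for illustration only
FIRST_ASSIGNABLE_RID = 1000  # assumed first RID handed out in this sketch


class RidMaster:
    """Hands out non-overlapping batches of relative IDs."""

    def __init__(self, next_rid=FIRST_ASSIGNABLE_RID, block_size=RID_BLOCK_SIZE):
        self._next_rid = next_rid
        self._block_size = block_size

    def allocate_pool(self):
        pool = range(self._next_rid, self._next_rid + self._block_size)
        self._next_rid = pool.stop  # the next batch starts where this one ends
        return pool


class DomainController:
    """Creates SIDs from the domain SID plus a RID from its local pool."""

    def __init__(self, name, domain_sid, rid_master):
        self.name = name
        self.domain_sid = domain_sid
        self._rid_master = rid_master
        self._pool = iter(())  # empty until the first request

    def new_principal_sid(self):
        rid = next(self._pool, None)
        if rid is None:
            # Local pool exhausted: ask the RID master for another batch.
            self._pool = iter(self._rid_master.allocate_pool())
            rid = next(self._pool)
        return "{}-{}".format(self.domain_sid, rid)


if __name__ == "__main__":
    domain_sid = "S-1-5-21-1111111111-2222222222-3333333333"  # made-up value
    master = RidMaster()
    dc1 = DomainController("DC1", domain_sid, master)
    dc2 = DomainController("DC2", domain_sid, master)
    print(dc1.new_principal_sid())
    print(dc2.new_principal_sid())
    print(dc1.new_principal_sid())
```

Because each batch is handed out by a single role holder, two domain controllers can create accounts at the same time without ever producing the same SID.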

PDC Emulator

The domain controller that has the PDC emulator FSMO role assigned to it has many duties and responsibilities in the domain. For example, password changes for users and computers that are made on other DCs are replicated preferentially to the DC with the PDC emulator role. When a user attempts to log in and enters a bad password, it’s the DC with the PDC emulator FSMO role that is consulted to determine whether the password has been changed without the replica DC’s knowledge. The PDC emulator is also the default domain controller for many administrative tools, and is likewise the default DC used when Group Policies are updated.

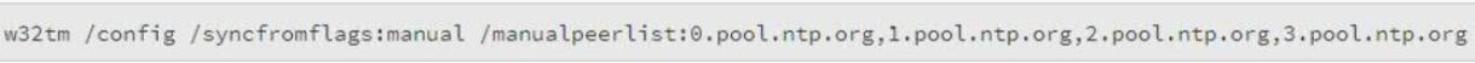

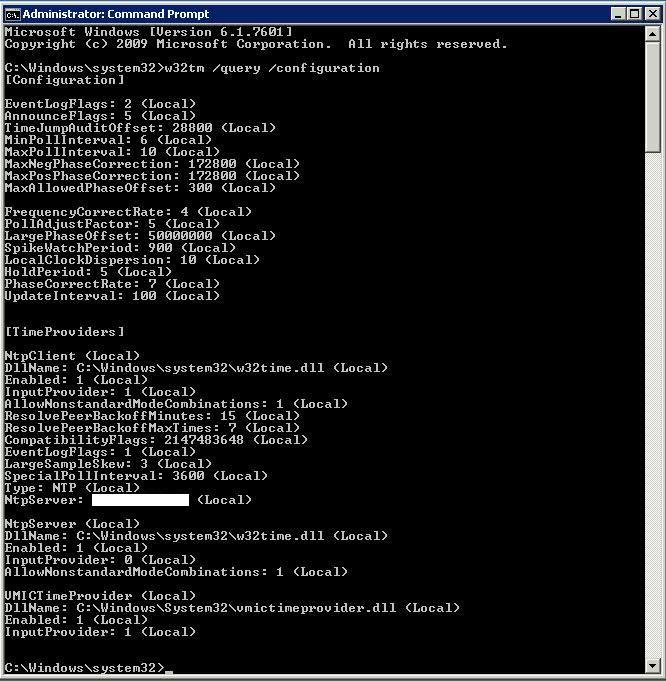

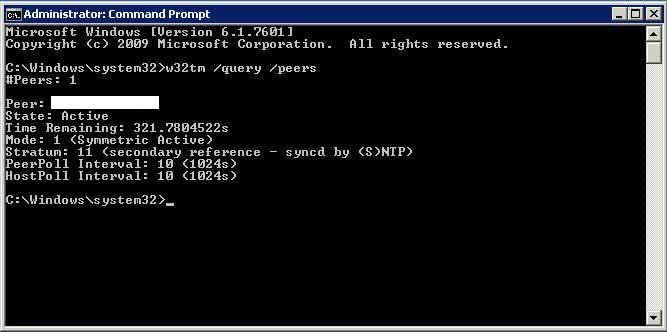

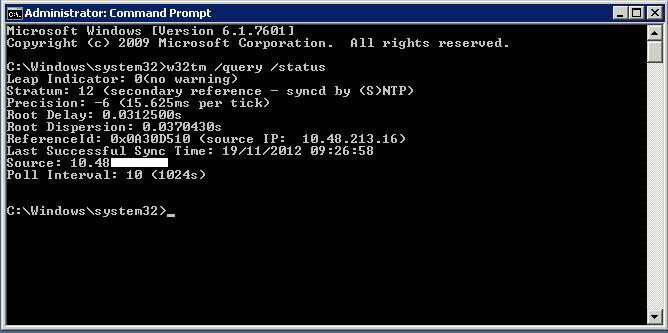

Additionally, it’s the PDC emulator that maintains the accurate time by which the domain is regulated. The time on the PDC emulator determines the last write time of an object (to resolve conflicts, for example). In a forest with multiple domains, the PDC emulator of the forest root domain is the authoritative time source for all domains in the forest.

Each domain in the forest needs its own PDC emulator. Ideally, you put the PDC emulator on the domain controller with the best hardware available.

Seizing of Roles

If a server that holds FSMO roles fails, you may need to seize its roles on another domain controller. Administrators should use extreme caution in seizing FSMO roles. This operation, in most cases, should be performed only if the original FSMO role owner will not be brought back into the environment.

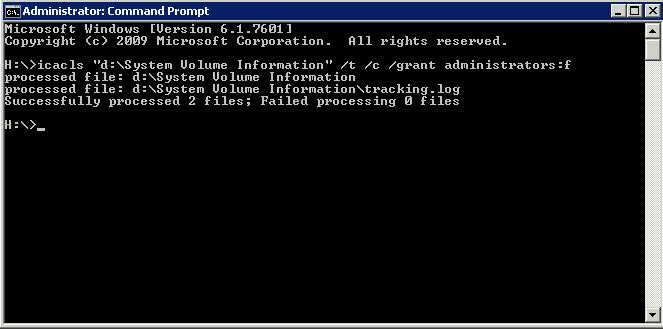

It is recommended that you log on to the domain controller that you are assigning FSMO roles to. The logged-on user should be a member of the Enterprise Administrators group to transfer schema or domain naming master roles, or a member of the Domain Administrators group of the domain where the PDC emulator, RID master and the Infrastructure master roles are being transferred.

For Schema Master:

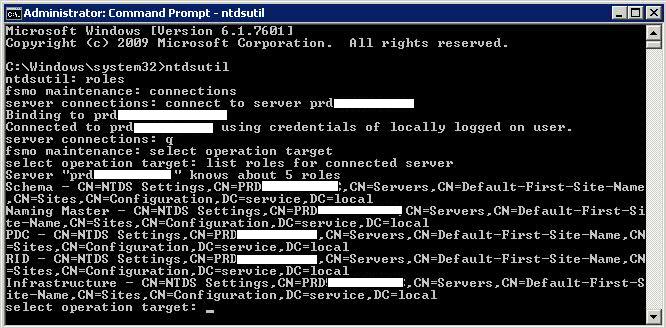

- Go to cmd prompt and type ntdsutil

- Type roles and press enter to enter fsmo maintenance.

- To see a list of available commands at any one of the prompts in the Ntdsutil utility, type ? and then press Enter

- Type connections to enter server connections.

- Type connect to server “Servername” and then press ENTER, where “Servername” is the name of the domain controller you want to assign the FSMO role to

- Type quit

- Type seize schema master. For a list of roles that you can seize, type ? at the fsmo maintenance prompt, and then press ENTER, or see the list of roles at the start of this article

After you have seized the role, type quit to exit NTDSUtil.

For Domain Naming Master:

- Go to cmd prompt and type ntdsutil

- Type roles and press enter to enter fsmo maintenance.

- To see a list of available commands at any one of the prompts in the Ntdsutil utility, type ? and then press Enter

- Type connections to enter server connections.

- Type connect to server “Servername” and then press ENTER, where “Servername” is the name of the domain controller you want to assign the FSMO role to

- Type quit

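- Type seize naming master. (Older releases of ntdsutil use seize domain naming master; type ? at the fsmo maintenance prompt to check which form your version accepts.)

After you have seized the role, type quit to exit NTDSUtil.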

For Infrastructure Master Role:

- Go to cmd prompt and type ntdsutil

- Type roles and press enter to enter fsmo maintenance.

- To see a list of available commands at any one of the prompts in the Ntdsutil utility, type ? and then press Enter

- Type connections to enter server connections.

- Type connect to server “Servername” and then press ENTER, where “Servername” is the name of the domain controller you want to assign the FSMO role to

- Type quit

- Type seize infrastructure master.

After you have seized the role, type quit to exit NTDSUtil.

For RID Master Role:

- Go to cmd prompt and type ntdsutil

- Type roles and press enter to enter fsmo maintenance.

- To see a list of available commands at any one of the prompts in the Ntdsutil utility, type ? and then press Enter

- Type connections to enter server connections.

- Type connect to server “Servername” and then press ENTER, where “Servername” is the name of the domain controller you want to assign the FSMO role to

- Type quit

- Type seize RID master.

After you have seized the role, type quit to exit NTDSUtil.

For PDC Emulator Role:

- Go to cmd prompt and type ntdsutil

- Type roles and press enter to enter fsmo maintenance.

- To see a list of available commands at any one of the prompts in the Ntdsutil utility, type ? and then press Enter

- Type connections to enter server connections.

- Type connect to server “Servername” and then press ENTER, where “Servername” is the name of the domain controller you want to assign the FSMO role to

- Type quit

- Type seize PDC.

After you have seized the role, type quit to exit NTDSUtil.
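The five procedures differ only in the seize command, so the whole sequence can be generated from a role name and a server name. The Python sketch below only builds and prints the command line; it does not run ntdsutil. It relies on ntdsutil accepting its menu commands as command-line arguments. The helper names and the server name DC02 are hypothetical, and the naming master entry uses the seize naming master spelling of current ntdsutil versions, so adjust it if your version expects the longer form.

```python
# Seize commands as typed at the fsmo maintenance prompt.
# Note: older ntdsutil releases may expect "seize domain naming master".
SEIZE_COMMANDS = {
    "schema": "seize schema master",
    "naming": "seize naming master",
    "infrastructure": "seize infrastructure master",
    "rid": "seize RID master",
    "pdc": "seize PDC",
}


def build_seize_command(role, server):
    """Return the ntdsutil argument list that mirrors the manual steps."""
    if role not in SEIZE_COMMANDS:
        raise ValueError("unknown role: {!r}".format(role))
    if not server or '"' in server or " " in server:
        raise ValueError("server must be a plain host name")
    return [
        "ntdsutil",
        "roles",                                  # enter fsmo maintenance
        "connections",                            # enter server connections
        "connect to server {}".format(server),    # target DC for the role
        "quit",                                   # back to fsmo maintenance
        SEIZE_COMMANDS[role],
        "quit",                                   # leave fsmo maintenance
        "quit",                                   # leave ntdsutil
    ]


def format_for_display(args):
    """Quote arguments that contain spaces so the line can be pasted into cmd."""
    return " ".join('"{}"'.format(a) if " " in a else a for a in args)


if __name__ == "__main__":
    # DC02 is a hypothetical domain controller name.
    print(format_for_display(build_seize_command("pdc", "DC02")))
```

Printing the command instead of executing it is a deliberate choice: seizing a role is hard to undo, so the generated line is meant to be reviewed by an administrator before it is run on the target domain controller.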

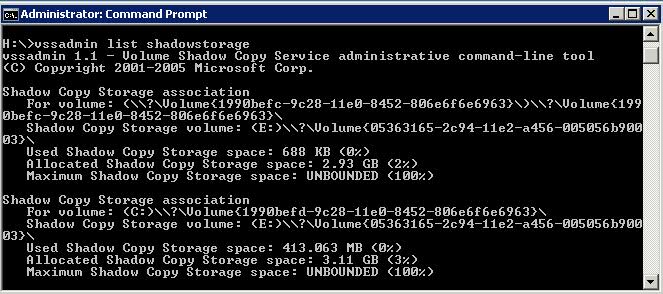

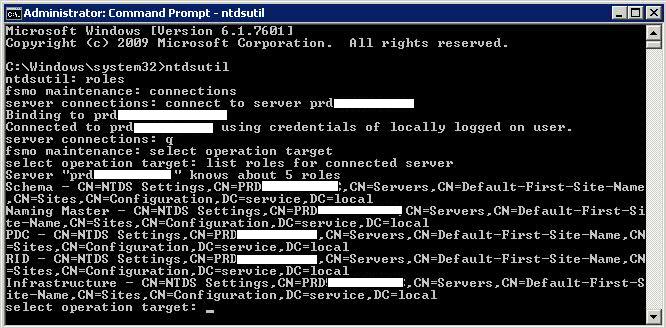

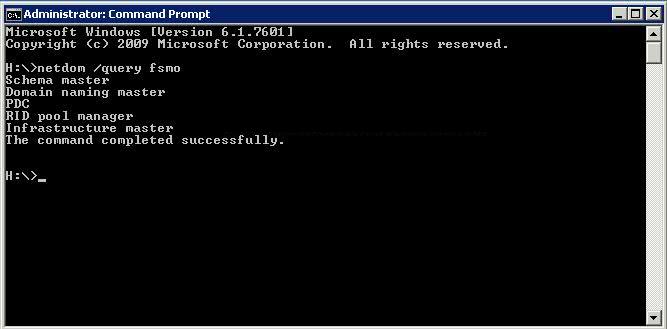

How can I determine the current FSMO role holders in my domain/forest?

- On any domain controller, click Start, click Run, type ntdsutil in the Open box, and then click OK.

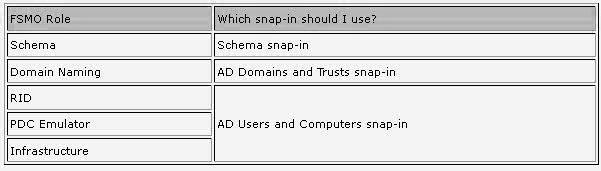

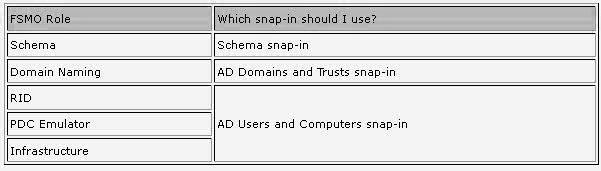
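Another way to list the role holders is the command netdom query fsmo, whose output can be turned into a lookup table with a few lines of Python. This is only a sketch: the sample text below is illustrative rather than captured from a real system, the server names are made up, and the parser assumes that each line separates the role name from the server name with two or more spaces.

```python
import re

# Illustrative sample in the shape of `netdom query fsmo` output (assumed format).
SAMPLE_OUTPUT = """\
Schema master               DC01.example.com
Domain naming master        DC01.example.com
PDC                         DC02.example.com
RID pool manager            DC02.example.com
Infrastructure master       DC03.example.com
The command completed successfully.
"""


def parse_fsmo_holders(text):
    """Map each role label to the server name that follows it on the same line."""
    holders = {}
    for line in text.splitlines():
        # Role and server are assumed to be separated by a run of 2+ spaces;
        # status lines such as the final success message do not match.
        parts = re.split(r"\s{2,}", line.strip())
        if len(parts) == 2:
            role, server = parts
            holders[role] = server
    return holders


if __name__ == "__main__":
    for role, server in parse_fsmo_holders(SAMPLE_OUTPUT).items():
        print("{:<24}{}".format(role, server))
```

In practice the text would come from running the command on a domain-joined machine and capturing its output; the parser itself has no dependency on Windows.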

The FSMO role holders can also be found easily with some of the AD snap-ins. Use this table to see which tool can be used for which FSMO role: