Tuning Configuration

- The minimum memory size is 4MB for virtual machines that use BIOS firmware. Virtual machines that use EFI firmware require at least 96MB of RAM, or they cannot power on.

- The memory size must be a multiple of 4MB.

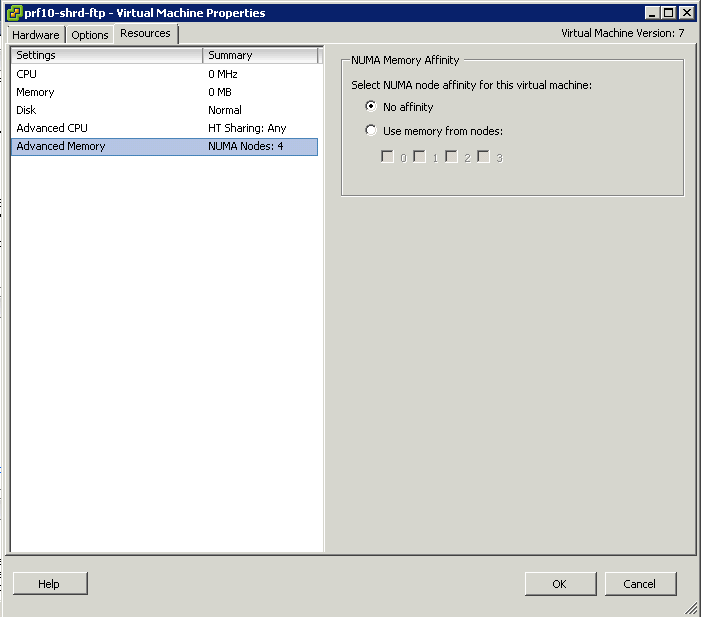
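The memory sizing rules above can be checked before a VM is created or reconfigured. The following is a minimal sketch in Python; the function name `validate_vm_memory` and its parameters are hypothetical and not part of any VMware tool or API.

```python
def validate_vm_memory(size_mb: int, firmware: str = "bios") -> list[str]:
    """Return a list of problems with a proposed VM memory size (empty if OK).

    Hypothetical helper: it only encodes the rules stated in these notes.
    """
    # Minimum sizes from the notes: 4MB for BIOS firmware, 96MB for EFI.
    minimums_mb = {"bios": 4, "efi": 96}
    fw = firmware.lower()
    if fw not in minimums_mb:
        raise ValueError(f"unknown firmware type: {firmware!r}")

    problems = []
    if size_mb < minimums_mb[fw]:
        problems.append(
            f"{size_mb}MB is below the {minimums_mb[fw]}MB minimum "
            f"for {fw.upper()} firmware"
        )
    # Memory size must be a multiple of 4MB regardless of firmware.
    if size_mb % 4 != 0:
        problems.append(f"{size_mb}MB is not a multiple of 4MB")
    return problems


if __name__ == "__main__":
    for size, fw in [(4096, "efi"), (64, "efi"), (6, "bios")]:
        print(size, fw, validate_vm_memory(size, fw) or "OK")
```

An empty list means the size passes both checks; each string in a non-empty list names one rule that was violated.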

- vNUMA exposes the host's NUMA topology to the guest OS. Hosts in the cluster should have matching NUMA architecture, and VMs must be running virtual hardware version 8 or later.

- Size VMs so that they align with physical NUMA boundaries. If a host has 6 cores per NUMA node, size your VMs with a multiple of 6 vCPUs.

- vNUMA can be enabled on smaller VMs (8 or fewer vCPUs, where it is off by default) by adding `numa.vcpu.maxPerVirtualNode=X` to the VM's advanced configuration, where X is the number of vCPUs per vNUMA node.

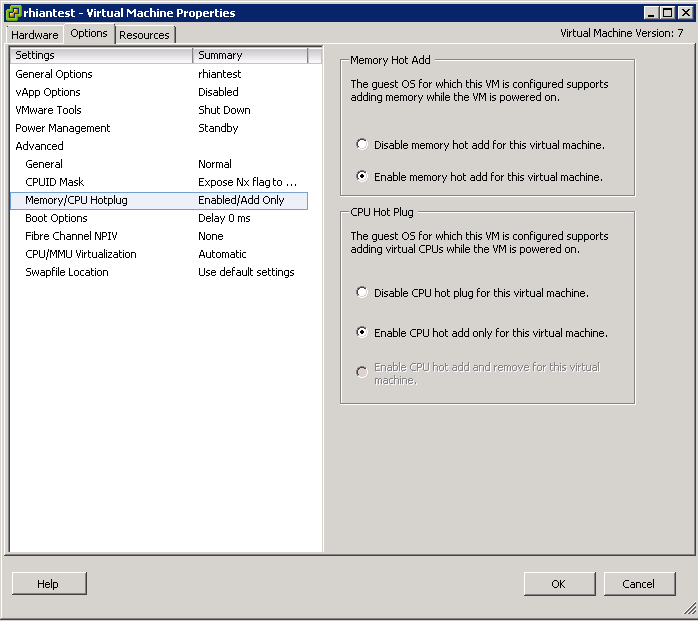
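The NUMA sizing rule and the advanced setting above can be sketched in a few lines of Python. Both function names are hypothetical. The first applies the "multiple of cores per node" rule literally by rounding up. The second only formats the setting named in these notes as a `key = "value"` line, which is assumed here to be the `.vmx` file format.

```python
def align_vcpus_to_numa(requested_vcpus: int, cores_per_node: int) -> int:
    """Round a requested vCPU count up to the next multiple of cores per NUMA node."""
    if requested_vcpus < 1 or cores_per_node < 1:
        raise ValueError("both values must be positive integers")
    # Ceiling division: how many full NUMA nodes the request spans.
    nodes = -(-requested_vcpus // cores_per_node)
    return nodes * cores_per_node


def vnuma_vmx_line(vcpus_per_node: int) -> str:
    """Format the advanced setting from the notes (assumed .vmx syntax)."""
    if vcpus_per_node < 1:
        raise ValueError("vcpus_per_node must be a positive integer")
    return f'numa.vcpu.maxPerVirtualNode = "{vcpus_per_node}"'


if __name__ == "__main__":
    # A host with 6 cores per NUMA node and a request for 10 vCPUs.
    print(align_vcpus_to_numa(10, 6))
    print(vnuma_vmx_line(6))
```

Rounding up is only one way to apply the rule. Rounding down to the previous multiple is equally valid when the workload does not need the extra vCPUs.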

- Enable Memory Hot Add so that memory can be added to a VM while it is running.

- Use guest operating systems that support large memory pages. By default, ESXi provides large pages to guests that request them.

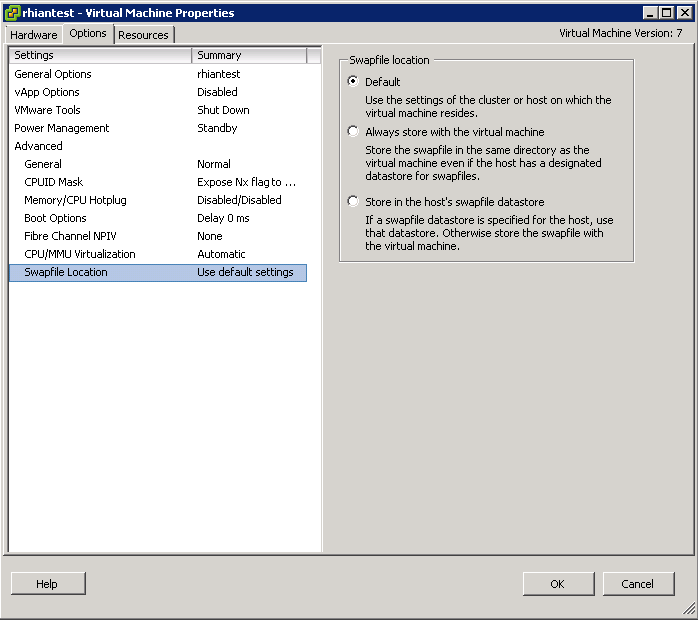

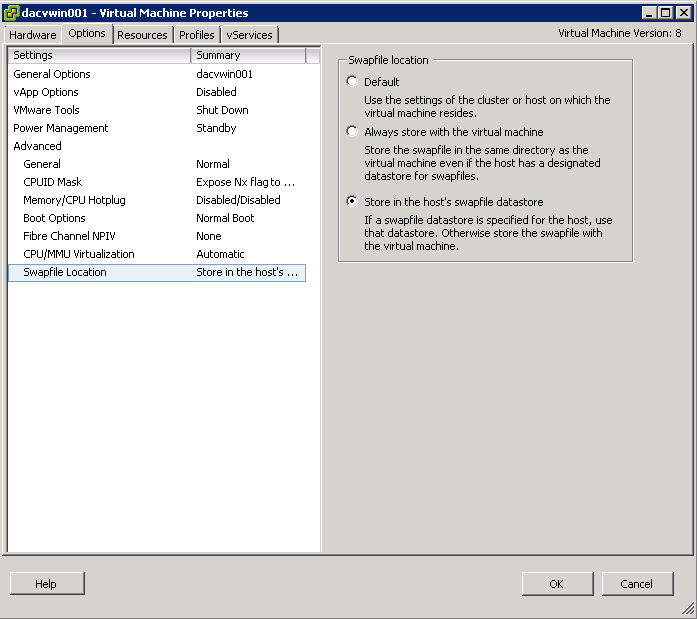

- Store a VM's swap file in a different, faster location than its working directory.

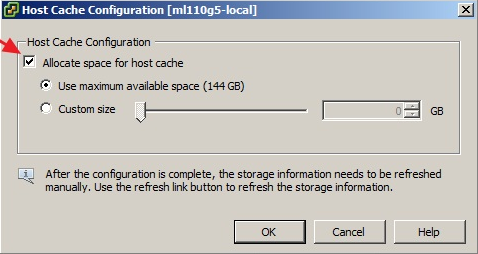

- Configure a host cache on an SSD (if one is installed) for the swap to host cache feature, which is new in vSphere 5. If you have a datastore that lives on an SSD, you can designate space on that datastore as host cache. The host cache serves all virtual machines on that host as write-back storage for their swap files: pages that need to be swapped to disk are swapped to the host cache first and then written back to that virtual machine's swap file.

- Keep virtual machine swap files on low-latency, high-bandwidth storage systems.

- Do not store swap files on thin provisioned LUNs. This can cause swap file growth to fail.

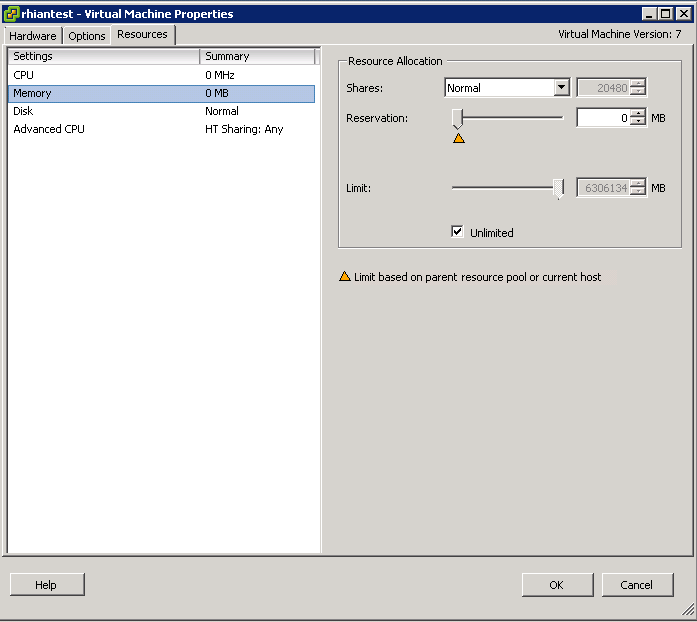

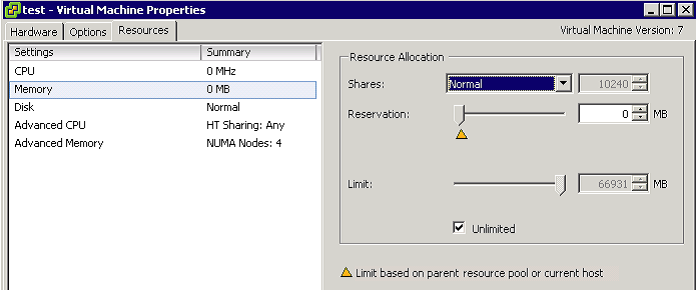

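- Use limits, reservations, and shares to control resource allocation per VM. A reservation guarantees a minimum, a limit caps the maximum, and shares set relative priority when VMs compete for resources.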
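To see how the three controls interact, here is a deliberately simplified model in Python. It is an illustration only and not ESXi's actual scheduling algorithm: it assumes each VM first receives its reservation, and the remaining capacity is then divided in proportion to shares, with no VM exceeding its limit. The names `VmPolicy` and `allocate` are hypothetical.

```python
from typing import NamedTuple


class VmPolicy(NamedTuple):
    reservation: float  # guaranteed minimum (e.g. MB)
    limit: float        # hard upper bound (same unit)
    shares: int         # relative weight under contention, must be > 0


def allocate(capacity: float, vms: dict[str, VmPolicy]) -> dict[str, float]:
    """Toy allocator: reservations first, then share-proportional fill up to limits."""
    if any(p.shares <= 0 or p.limit < p.reservation for p in vms.values()):
        raise ValueError("shares must be positive and limit >= reservation")
    alloc = {name: float(p.reservation) for name, p in vms.items()}
    remaining = capacity - sum(alloc.values())
    if remaining < 0:
        raise ValueError("reservations exceed capacity")

    # VMs that can still receive more than they currently hold.
    active = {name for name, p in vms.items() if p.limit > p.reservation}
    while remaining > 1e-9 and active:
        total_shares = sum(vms[n].shares for n in active)
        handed_out = 0.0
        capped = set()
        for n in active:
            offer = remaining * vms[n].shares / total_shares
            room = vms[n].limit - alloc[n]
            give = min(offer, room)
            alloc[n] += give
            handed_out += give
            if give >= room:
                capped.add(n)  # hit its limit; leftover goes to the others
        remaining -= handed_out
        active -= capped
        if not capped:
            break  # everything was distributed this round
    return alloc


if __name__ == "__main__":
    policies = {
        "db": VmPolicy(reservation=1024, limit=8192, shares=2000),
        "web": VmPolicy(reservation=1024, limit=2048, shares=1000),
        "batch": VmPolicy(reservation=0, limit=8192, shares=1000),
    }
    for name, mb in allocate(8192, policies).items():
        print(f"{name}: {mb:.0f} MB")
```

In this model shares only matter when capacity is contended. If every VM can reach its limit, the share values have no effect on the result.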