The Task

For this task we had six RHEL6 VMs, and we had been asked to attach the same RDM disk to all of them in a non-cluster-aware scenario. That meant no SQL or Exchange clustering, just simple sharing of a LUN between the VMs.

About Raw Device Mapping (RDM)

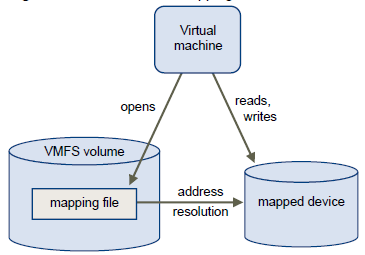

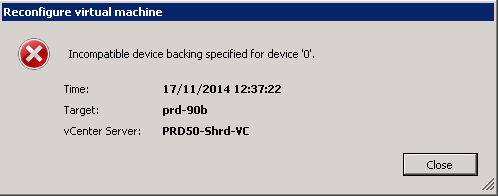

An RDM is a mapping file in a separate VMFS volume that acts as a proxy for a raw physical storage device.

The RDM allows a virtual machine to directly access and use the storage device. The RDM contains metadata for managing and redirecting disk access to the physical device.

The file gives you some of the advantages of direct access to a physical device while keeping some advantages of a virtual disk in VMFS. As a result, it merges VMFS manageability with raw device access. RDMs can be described in terms such as mapping a raw device into a datastore, mapping a system LUN, or mapping a disk file to a physical disk volume. All these terms refer to RDMs.

Although VMware recommends that you use VMFS datastores for most virtual disk storage, on certain occasions, you might need to use raw LUNs or logical disks located in a SAN.

When you give your virtual machine direct access to a raw SAN LUN, you create an RDM disk that resides on a VMFS datastore and points to the LUN. You can create the RDM as an initial disk for a new virtual machine or add it to an existing virtual machine. When creating the RDM, you specify the LUN to be mapped and the datastore on which to put the RDM.

Although the RDM disk file has the same .vmdk extension as a regular virtual disk file, the RDM contains only mapping information. The actual virtual disk data is stored directly on the LUN.

Compatibility Modes

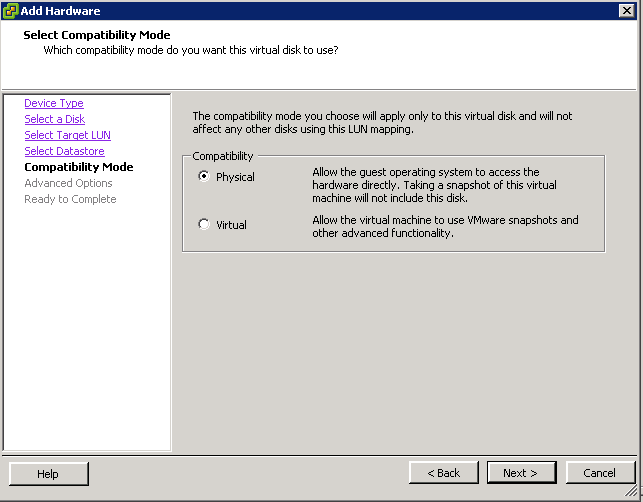

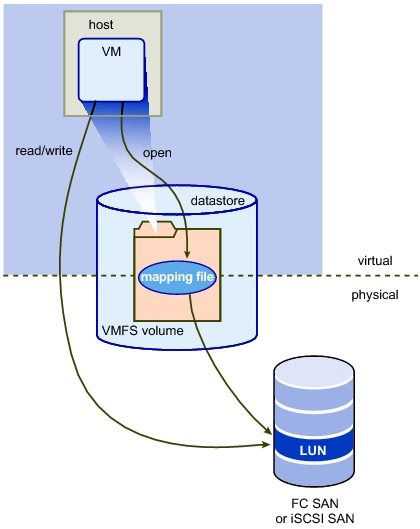

Two compatibility modes are available for RDMs:

- Virtual compatibility mode allows an RDM to act exactly like a virtual disk file, including the use of snapshots.

- Physical compatibility mode allows direct access to the SCSI device for applications that need lower-level control.
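On the datastore, the RDM's .vmdk is a small text descriptor. Its `createType` line records which of these two modes was chosen, so it is a quick way to confirm which pointer file a second VM should reuse. As we understand the format, physical mode is written as `vmfsPassthroughRawDeviceMap` and virtual mode as `vmfsRawDeviceMap`. Treat that as an assumption and compare it against a descriptor from your own datastore. The Python sketch below only parses descriptor text that you have already copied off the datastore. The function `rdm_compatibility_mode` and the sample file name are hypothetical.

```python
import re

# createType values as we understand the .vmdk descriptor format
# (assumption: verify against a descriptor from your own datastore).
MODES = {
    "vmfsPassthroughRawDeviceMap": "physical",  # physical compatibility RDM
    "vmfsRawDeviceMap": "virtual",              # virtual compatibility RDM
}


def rdm_compatibility_mode(descriptor_text: str) -> str:
    """Return 'physical', 'virtual' or 'not an RDM' for a .vmdk descriptor."""
    match = re.search(r'^createType\s*=\s*"([^"]+)"', descriptor_text, re.MULTILINE)
    if match is None:
        raise ValueError("no createType line found; is this a descriptor file?")
    # Any other createType (e.g. an ordinary VMFS disk) is not an RDM.
    return MODES.get(match.group(1), "not an RDM")


# Illustrative descriptor only; the extent file name is hypothetical.
SAMPLE = """# Disk DescriptorFile
version=1
createType="vmfsPassthroughRawDeviceMap"

# Extent description
RW 209715200 VMFSRDM "rhel-shared-rdmp.vmdk"
"""

if __name__ == "__main__":
    print(rdm_compatibility_mode(SAMPLE))  # physical
```

For the setup in this post, the descriptor that the second VM points at should report `physical`.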

Instructions

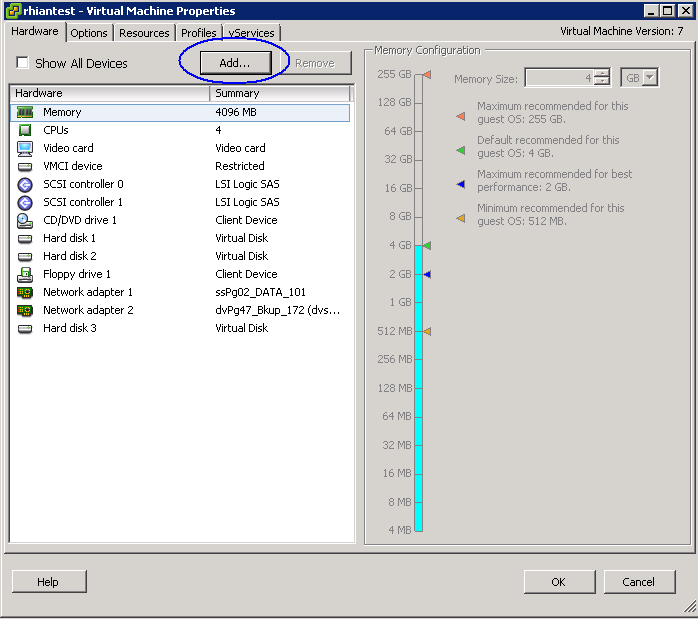

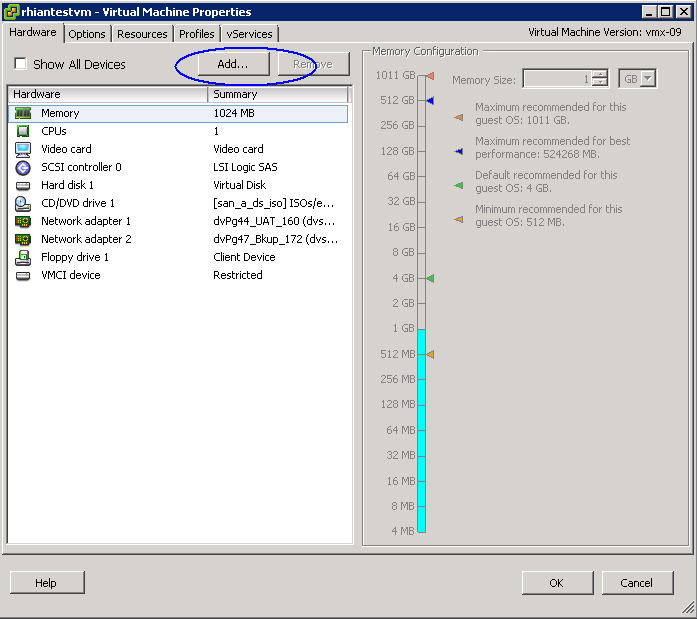

- Log in to vCenter, select the first VM and click Edit Settings. Note that the VM must be powered off before you can change some of the settings later in this procedure, such as SCSI Bus Sharing.

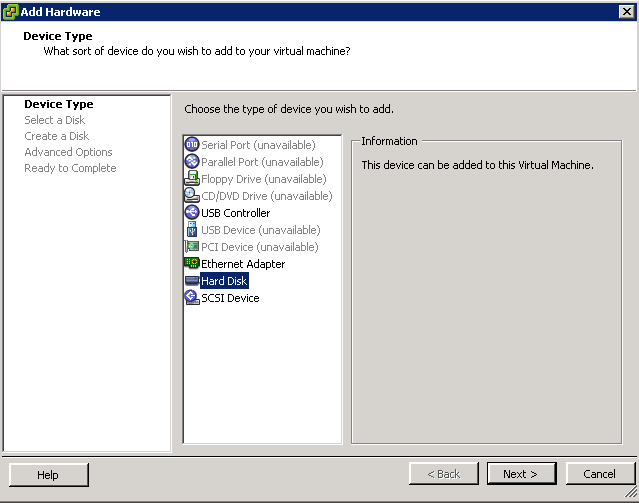

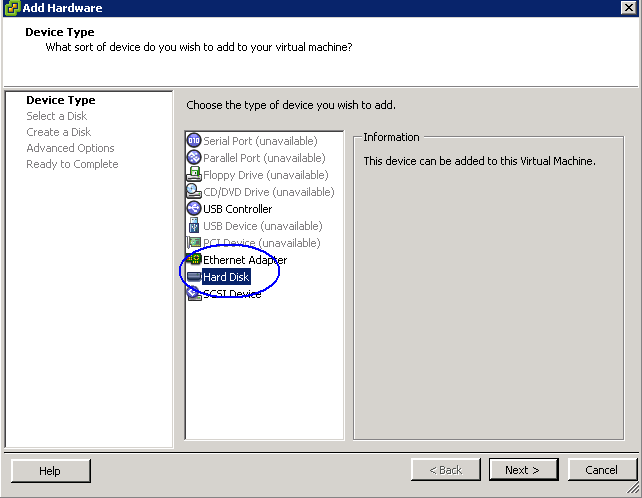

- Click Add and choose Hard Disk

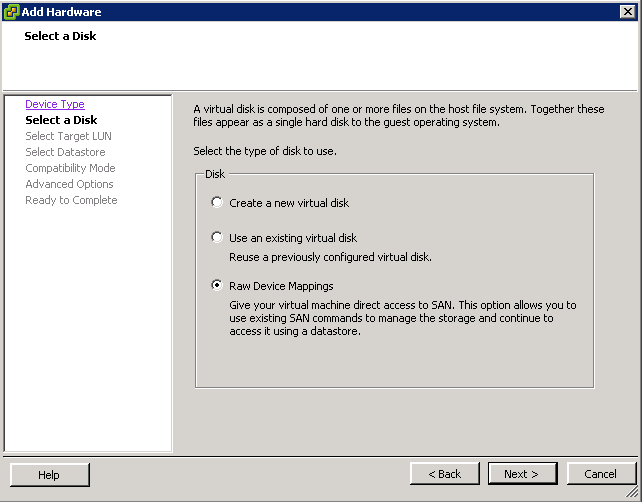

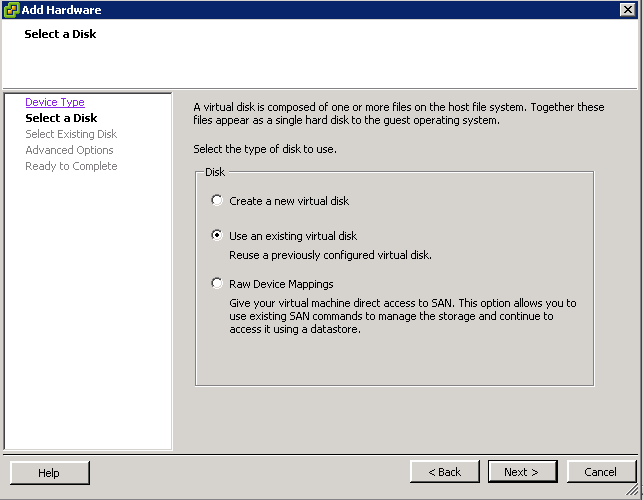

- Choose Raw Disk Mapping

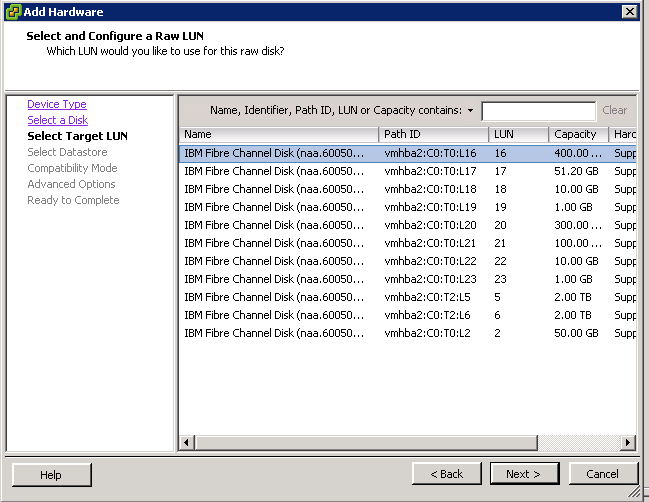

- Select the Raw Disk you want to use

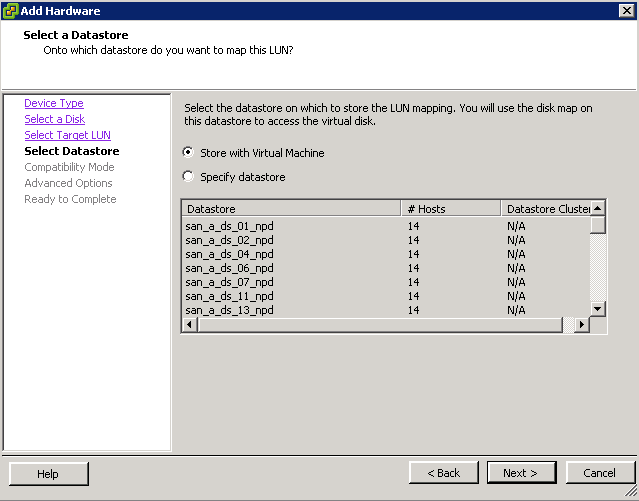

- Select whether to store the RDM mapping file with the VM or on a separate datastore

- Choose a Compatibility Mode: Physical or Virtual. We need Physical.

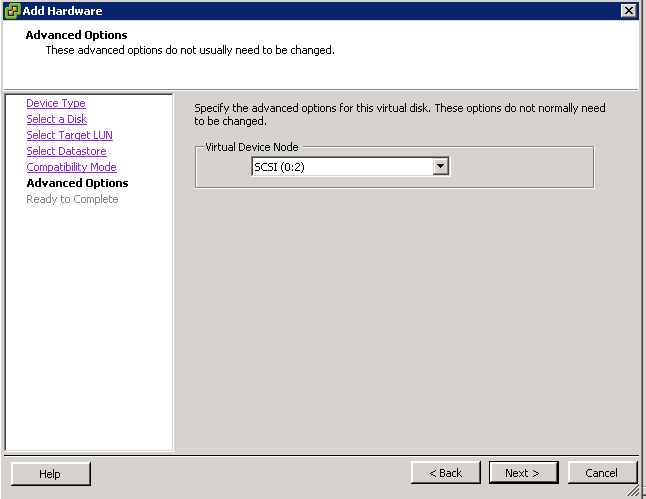

- Choose a SCSI Device Mode. This must also be the same on the second VM that you are going to add the same RDM to.

- Click Finish

- Next, go to the second VM, click Edit Settings and click Add

- Choose Hard Disk

- Choose to use an existing disk

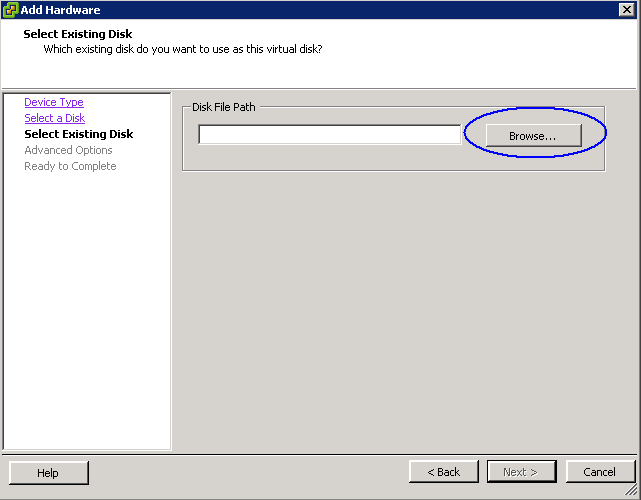

- Browse to the datastore that the first VM is on, find the RDM VMDK file and select it

- In Advanced Options, select the same SCSI ID that the RDM uses on the first VM

- Click Finish and the Edit Settings box will come up again

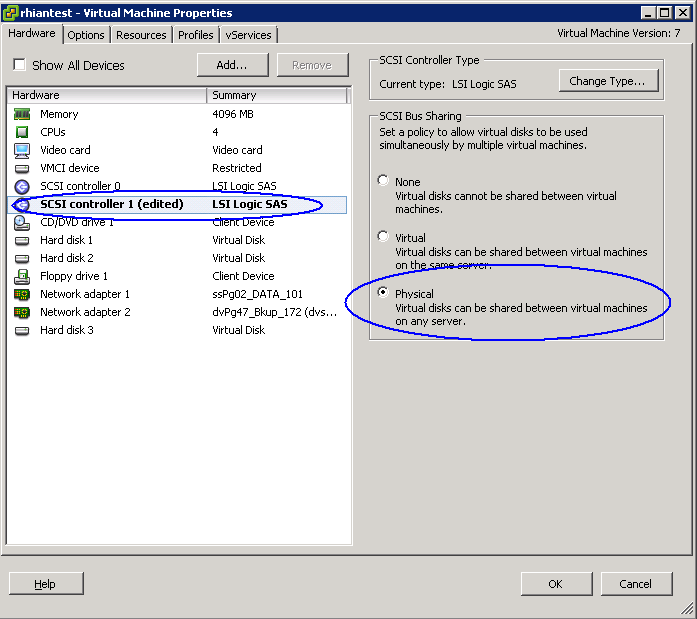

- Change SCSI Bus Sharing on the SCSI controller to Physical to allow sharing. The controller that holds the RDM on the first VM needs the same setting.

- Click OK

- You should now have a shared RDM between 2 VMs

- Power on the VMs
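The settings that the steps above say must line up between VMs can be collected into a small pre-flight check, which is useful when repeating the procedure for all six VMs. This is a minimal sketch under stated assumptions. `SharedDiskConfig` and `check_shared_rdm` are hypothetical names. The values are read off each VM's Edit Settings dialog by hand, and nothing here talks to vCenter. The check also assumes that every VM's controller, including the first VM's, uses Physical bus sharing.

```python
from dataclasses import dataclass


@dataclass
class SharedDiskConfig:
    """One VM's attachment of the shared RDM (hypothetical model, filled in by hand)."""
    vm_name: str
    powered_off: bool
    compatibility_mode: str  # "physical" or "virtual"
    device_node: str         # SCSI ID chosen in Advanced Options, e.g. "SCSI (1:0)"
    bus_sharing: str         # controller setting: "none", "virtual" or "physical"
    mapping_file: str        # datastore path of the RDM .vmdk the VM points at


def check_shared_rdm(configs: list[SharedDiskConfig]) -> list[str]:
    """Return the mismatches between VMs; an empty list means the settings line up."""
    if len(configs) < 2:
        return ["at least two VMs are needed to share the RDM"]
    first = configs[0]  # every other VM is compared against the first VM
    problems = []
    for cfg in configs:
        if not cfg.powered_off:
            problems.append(f"{cfg.vm_name}: power off before changing controller settings")
        if cfg.compatibility_mode != "physical":
            problems.append(f"{cfg.vm_name}: compatibility mode should be physical")
        if cfg.bus_sharing != "physical":
            problems.append(f"{cfg.vm_name}: SCSI bus sharing should be physical")
        if cfg.device_node != first.device_node:
            problems.append(f"{cfg.vm_name}: device node {cfg.device_node} != {first.device_node}")
        if cfg.mapping_file != first.mapping_file:
            problems.append(f"{cfg.vm_name}: points at {cfg.mapping_file}, not {first.mapping_file}")
    return problems


if __name__ == "__main__":
    # Hypothetical VM names and datastore path.
    rdm = "[ds01] rhel01/rhel01_1.vmdk"
    vm1 = SharedDiskConfig("rhel01", True, "physical", "SCSI (1:0)", "physical", rdm)
    vm2 = SharedDiskConfig("rhel02", True, "physical", "SCSI (1:1)", "physical", rdm)
    print(check_shared_rdm([vm1, vm2]))  # ['rhel02: device node SCSI (1:1) != SCSI (1:0)']
```

Comparing everything against the first VM mirrors the procedure, where each additional VM is pointed at the first VM's RDM file and SCSI ID.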

Problems: Incompatible Device backing for device 0

We hit this error when we tried to accept the settings on the second VM.

We resolved it by having a member of our storage team recreate the LUNs we needed to add on the SAN. When sharing MSCS RDM LUNs between nodes, ensure that the LUNs are presented uniformly across all ESXi/ESX hosts. Specifically, the LUN ID for each LUN must be the same on all hosts.

For VMware and Windows clusters we use the IBM V7000 GUI to map the LUNs, which is easier: it assigns the first available SCSI ID. This causes no issues with those operating systems.

With Red Hat, however, this didn't work, because it uses the SCSI ID together with the WWNs. We therefore had to use the V7000 CLI to map each LUN to every host with one and the same SCSI ID.
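Two small helpers make the "same LUN ID on every host" rule concrete. The first flags any LUN whose SCSI ID differs between hosts, or that some host does not see at all. The second builds one mapping command per host with a fixed SCSI ID per volume. This is a sketch under stated assumptions. The function names, host names, WWNs and volume names are hypothetical. The `mkvdiskhostmap -host <host> -scsi <id> <vdisk>` syntax is how we recall the V7000 (Storwize) CLI, so confirm it against the CLI reference for your code level. The script only prints strings and never runs them.

```python
from collections import defaultdict


def inconsistent_luns(presentations: dict[str, dict[str, int]]) -> dict[str, dict[str, int]]:
    """Return LUNs whose SCSI ID differs between hosts or that some host does not see.

    presentations maps host name -> {LUN WWN -> SCSI ID as presented to that host}.
    """
    ids_by_lun: dict[str, dict[str, int]] = defaultdict(dict)
    for host, luns in presentations.items():
        for wwn, scsi_id in luns.items():
            ids_by_lun[wwn][host] = scsi_id
    return {
        wwn: per_host
        for wwn, per_host in ids_by_lun.items()
        # Non-uniform if the IDs disagree or the LUN is missing on a host.
        if len(set(per_host.values())) > 1 or len(per_host) < len(presentations)
    }


def mapping_commands(hosts: list[str], vdisks: list[str], first_scsi_id: int) -> list[str]:
    """Give each vdisk one SCSI ID and build one mapping command per host.

    Assumed CLI syntax: mkvdiskhostmap -host <host> -scsi <id> <vdisk>.
    """
    commands = []
    for offset, vdisk in enumerate(vdisks):
        scsi_id = first_scsi_id + offset  # same ID for this vdisk on every host
        for host in hosts:
            commands.append(f"mkvdiskhostmap -host {host} -scsi {scsi_id} {vdisk}")
    return commands


if __name__ == "__main__":
    seen = {
        "esx01": {"wwn-a": 1, "wwn-b": 2},
        "esx02": {"wwn-a": 1, "wwn-b": 3},
    }
    print(inconsistent_luns(seen))  # {'wwn-b': {'esx01': 2, 'esx02': 3}}
    for cmd in mapping_commands(["esx01", "esx02"], ["rhel_shared_01"], first_scsi_id=1):
        print(cmd)
```

Choosing the SCSI ID explicitly, instead of letting the GUI take the first free one per host, is what keeps the ID identical everywhere.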