Network Load Balancing is a feature of recent Microsoft server operating systems, including Windows 2000 Advanced Server, Windows Server 2003, and Windows Server 2008. This clustering technology enables you to improve the scalability and availability of Internet server programs, such as Web servers, proxy servers, DNS servers, FTP servers, virtual private network servers, streaming media servers, and terminal services servers.

In addition, it can detect host failures and automatically redistribute traffic to servers that are still operating.

In a VMware® Infrastructure 3 environment, you can create a cluster for Network Load Balancing using virtual machines on the same host or virtual machines on multiple hosts.

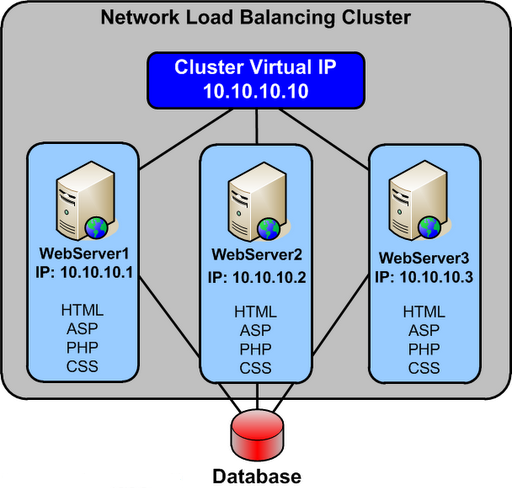

Network Load Balancing Basics

Network Load Balancing is implemented in a special driver installed on each Windows host in a cluster. The cluster presents a single IP address to clients. When client requests arrive, they go to all hosts in the cluster, and an algorithm implemented in the driver maps each request to a particular host. The other hosts in the cluster drop the request. You can set load partitioning to distribute specified percentages of client connections to particular hosts. You also have the option of routing all requests from a particular client to the host that handled that client’s first request.

Hosts in the cluster exchange heartbeat messages so they can maintain consistent information about which hosts are members of the cluster. If a host fails, client requests are rebalanced across the remaining hosts, with each remaining host handling a percentage of requests proportional to the percentage you specified in the initial configuration.
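The proportional rebalancing described above comes down to simple arithmetic. The following Python sketch is illustrative only: the function name `rebalance`, the host names, and the weights are hypothetical, and it shows the resulting proportions rather than how the NLB driver actually computes them.

```python
def rebalance(weights, alive):
    """Return each live host's traffic share, proportional to its configured weight.

    weights: dict of host name -> configured load percentage (hypothetical values)
    alive:   set of host names that are still sending heartbeats
    """
    live = {host: w for host, w in weights.items() if host in alive}
    total = sum(live.values())
    if total == 0:
        raise ValueError("no live host with a non-zero weight")
    return {host: w / total for host, w in live.items()}


configured = {"node1": 50, "node2": 30, "node3": 20}

# All hosts up: shares match the configured percentages.
print(rebalance(configured, {"node1", "node2", "node3"}))

# node2 fails: its 30% is split between node1 and node3 in a 50:20 ratio.
print(rebalance(configured, {"node1", "node3"}))
```

With node2 down, node1's share rises from 50% to 50/70 (about 71%) and node3's from 20% to 20/70 (about 29%).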

Planning a Network Load Balancing Cluster

Network Load Balancing relies on the fact that incoming packets are directed to all cluster hosts and passed to the Network Load Balancing driver for filtering.

You can configure a Network Load Balancing cluster in one of the following modes:

- Multicast

Multicast mode adds a Layer 2 multicast address to the cluster adapter instead of replacing the adapter's own address. Communication among hosts is possible because the hosts retain their original unique media access control (MAC) addresses and already have unique, dedicated IP addresses. However, the address resolution protocol (ARP) reply that is sent by a host in the cluster (in response to an ARP request) maps the cluster’s unicast IP address to its multicast MAC address.

Some routers do not support the resolution of unicast IP addresses to multicast MAC addresses, and they discard the ARP reply. As a result, an administrator must add a static ARP entry in the router, mapping the cluster IP address to its MAC address.
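To write the static ARP entry, the administrator needs the cluster MAC address. The sketch below assumes the convention usually described for NLB, in which the cluster MAC is a fixed two-byte prefix (`02-bf` in unicast mode, `03-bf` in multicast mode) followed by the four octets of the cluster IP address. Treat that convention and the helper names as assumptions, and confirm the real value in Network Load Balancing Manager before configuring a router.

```python
import ipaddress


def nlb_cluster_mac(cluster_ip: str, mode: str = "multicast") -> str:
    """Derive a cluster MAC from the cluster IPv4 address (assumed convention)."""
    prefixes = {"unicast": (0x02, 0xBF), "multicast": (0x03, 0xBF)}
    if mode not in prefixes:
        raise ValueError(f"unknown mode: {mode}")
    octets = ipaddress.IPv4Address(cluster_ip).packed  # the 4 raw address bytes
    return "-".join(f"{b:02x}" for b in (*prefixes[mode], *octets))


def is_multicast_mac(mac: str) -> bool:
    """A MAC is a group (multicast) address when the low bit of its first octet is 1."""
    return (int(mac.split("-")[0], 16) & 1) == 1


mac = nlb_cluster_mac("192.168.1.10")       # hypothetical cluster IP
print(mac, is_multicast_mac(mac))           # 03-bf-c0-a8-01-0a True
```

The second helper shows why some routers object: the ARP reply pairs an ordinary unicast IP address with a MAC address whose group bit is set.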

NOTE VMware recommends that you use multicast mode, because unicast mode forces the physical switches on the LAN to broadcast all Network Load Balancing traffic to every machine on the LAN.

- Unicast

Unicast mode works seamlessly with all routers and Layer 2 switches. However, this mode induces switch flooding, a condition in which all switch ports are flooded with NLB traffic, even ports attached to servers that are not involved in NLB. To communicate among hosts, you must have a second virtual adapter for each host.

Normally, switched environments avoid port flooding when a switch learns the MAC addresses of the hosts that are sending network traffic through it. The Network Load Balancing cluster masks the cluster’s MAC address for all outgoing traffic to prevent the switch from learning the MAC address.

On an ESX host, the VMkernel sends a reverse address resolution protocol (RARP) packet each time certain actions occur—for example, when a virtual machine is powered on, when there is a teaming failover, or when certain VMotion operations occur. The RARP packet gives physical switches the MAC address of the virtual machine involved in the action. In a Network Load Balancing cluster environment, after a Network Load Balancing node is powered on, the notification in the RARP packet exposes the MAC address of the cluster NIC. As a result, switches might begin to send all inbound traffic destined for the Network Load Balancing cluster through one switch port to a single node of the cluster.
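The interaction between MAC learning, flooding, and the RARP notification can be illustrated with a toy model. This Python sketch is not how any real switch or ESX is implemented: the `LearningSwitch` class, the port numbers, and all MAC addresses are hypothetical, and it only models the learn-or-flood rule described above.

```python
class LearningSwitch:
    """Toy Layer 2 switch: learns source MACs, floods unknown destinations."""

    def __init__(self, ports):
        self.ports = set(ports)
        self.mac_table = {}  # learned MAC address -> port

    def receive(self, in_port, src_mac, dst_mac):
        """Handle one frame and return the set of ports it is sent out on."""
        self.mac_table[src_mac] = in_port        # learn where the sender lives
        out_port = self.mac_table.get(dst_mac)
        if out_port is None:                     # unknown destination: flood
            return self.ports - {in_port}
        return {out_port}


CLUSTER_MAC = "02-bf-0a-00-00-0a"   # hypothetical cluster MAC
CLIENT_MAC = "00-50-56-00-00-99"    # hypothetical client MAC

# NLB nodes sit on ports 1 and 2, the client on port 3, an unrelated server on 4.
switch = LearningSwitch(ports=[1, 2, 3, 4])

# The nodes send with masked source MACs, so the cluster MAC is never learned.
switch.receive(1, "02-01-0a-00-00-0a", CLIENT_MAC)
switch.receive(2, "02-02-0a-00-00-0a", CLIENT_MAC)
flooded = switch.receive(3, CLIENT_MAC, CLUSTER_MAC)
print(flooded)

# A RARP-style notification carrying the real cluster MAC arrives on port 1.
switch.receive(1, CLUSTER_MAC, "ff-ff-ff-ff-ff-ff")
pinned = switch.receive(3, CLIENT_MAC, CLUSTER_MAC)
print(pinned)
```

Before the notification, the client's frame goes out on ports 1, 2, and 4, so both nodes see it (along with the unrelated server). Afterwards the switch has learned the cluster MAC on port 1 and sends the frame only there, which is the single-node problem the paragraph above describes.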

Because the virtual switch operates with complete data about the underlying MAC addresses of the virtual NICs inside each virtual machine, it always correctly forwards packets containing a MAC address matching that of a running virtual machine. As a result of this behavior, the virtual switch does not forward traffic destined for the Network Load Balancing MAC address outside the virtual environment into the physical network, because it is able to forward it to a local virtual machine.

Configuring Network Load Balancing in Windows

- Install a Windows operating system that supports Network Load Balancing in your virtual machines.

- You should install two virtual NICs in each virtual machine that will be part of the Network Load Balancing cluster. One virtual NIC from each virtual machine is used for Network Load Balancing. The other virtual NIC is used for management of the Windows virtual machine.

- Each Network Load Balancing node requires a static IP address to be assigned to the Network Load Balancing‐bound adapter. You need one or more additional static IP addresses to be used as the virtual IP addresses of the Network Load Balancing cluster. Use IP addresses that belong to the same subnet.

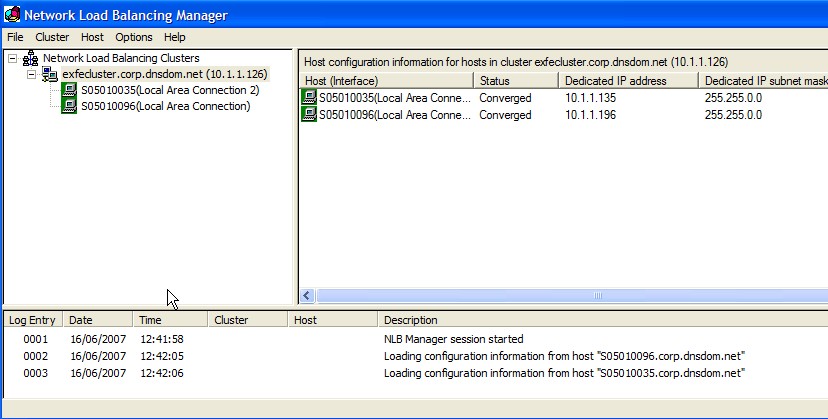

- Configure Network Load Balancing options using one of the following: Network Load Balancing Manager, or the Network Load Balancing Properties dialog box accessed through Network Connections.

- VMware recommends that you use Network Load Balancing Manager. Using both Network Load Balancing Manager and Network Connections to change Network Load Balancing properties can lead to unpredictable results.

You can use Network Load Balancing Manager from inside a node or from a remote machine that can communicate with all nodes. By default, Network Load Balancing Manager is installed on Windows Server 2003, and you can access it by clicking Settings > Control Panel > Administrative Tools > Network Load Balancing Manager.
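The addressing requirement in the list above (a static IP address per node plus one or more cluster IP addresses, all in the same subnet) is easy to check before you start configuring. The following Python sketch uses the standard `ipaddress` module; the function name `check_nlb_addresses` and the sample addresses are hypothetical.

```python
import ipaddress


def check_nlb_addresses(subnet, dedicated_ips, cluster_ips):
    """Return a list of problems found in an NLB address plan (empty if none)."""
    net = ipaddress.ip_network(subnet)
    all_ips = list(dedicated_ips) + list(cluster_ips)
    problems = []
    for ip in all_ips:
        if ipaddress.ip_address(ip) not in net:
            problems.append(f"{ip} is outside {net}")
    if len(set(all_ips)) != len(all_ips):
        problems.append("duplicate address in the plan")
    return problems


# Two nodes with one dedicated IP each, plus one virtual (cluster) IP.
print(check_nlb_addresses("10.0.0.0/24", ["10.0.0.11", "10.0.0.12"], ["10.0.0.10"]))

# A cluster IP in the wrong subnet is reported.
print(check_nlb_addresses("10.0.0.0/24", ["10.0.0.11", "10.0.0.12"], ["10.0.1.5"]))
```

An empty list means every address is unique and belongs to the given subnet.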

Configuring Multicast Mode on VMware Switches

You do not need to take any special steps to configure your ESX host when you are using multicast mode.

Configuring Unicast Mode on VMware Switches

This procedure helps prevent RARP packet transmission for the virtual switch as a whole. This setting affects all the port groups that use the switch. To prevent RARP packet transmission for a virtual switch, do the following:

- Log on to the VI Client and select the ESX host.

- Click the Configuration tab.

- Choose Networking and, for the virtual switch, select Properties.

- On the Ports tab, select the virtual switch and click Edit.

- Click the NIC Teaming tab and set Notify Switches to No.

- Click OK and close the vSwitch Properties dialog box.

Complete the following steps to prevent RARP packet transmission only for an individual port group. This setting overrides the setting you make for the virtual switch.

- Log on to the VI Client and select the ESX host.

- Click the Configuration tab.

- Choose Networking and, for the virtual switch, select Properties.

- On the Ports tab, select the port group and click Edit.

- Click the NIC Teaming tab and set Notify Switches to No.

- Click OK and close the vSwitch Properties dialog box.


What Happens to the Packets?

- The packets (connections) reach the network adapter of every machine in the cluster.

- The NLB module sits directly on top of the adapter driver and receives each packet.

- Based on the client's source IP address and/or the client's port number, an algorithm in the NLB module of each server in the cluster decides whether that server should pick up the connection.

- The algorithm guarantees that only one machine in the cluster picks up the packet. Think of this as an agreed-upon horizontal partitioning strategy. For instance, a trivial implementation in a two-machine cluster could have machine 1 pick up all connections from odd IP addresses and machine 2 pick up all connections from even IP addresses. This strategy is foolproof and requires no communication between the machines at all. The actual algorithm is a randomization algorithm that, depending on configuration, uses more than just the client IP address to decide whether to pick up a packet.

- If the NLB module determines that it should pick up the packet, it passes the packet up the stack. If not, it discards the packet at this stage.

- NLB supports differential load balancing configurations, in which you specify an unequal distribution of load between the machines in a cluster and the algorithm takes care of it automatically.

- NLB also maintains a heartbeat between machines. If any machine is found to be down, NLB automatically redistributes the load among the remaining machines.

- NLB also supports client_affinity (another name for sticky sessions), in which all connections originating from a single IP address are sent to a single server. This breaks down if a reverse proxy sits in front of the cluster, because all connections then appear to originate from a single IP address. Do not enable client_affinity if you have a reverse proxy. In most cases the reverse proxy can perform the relevant load balancing itself, so NLB would be redundant.
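The filtering decision in the list above can be sketched in a few lines of Python. This is a stand-in, not NLB's actual algorithm: the SHA-256 hash, the function names `owner` and `accepts`, the affinity values `"single"` and `"none"`, and the host names are all assumptions chosen for illustration. The point is only that every host runs the same deterministic function on the same input, so exactly one host accepts each connection without any per-packet coordination.

```python
import hashlib


def owner(client_ip, client_port, hosts, affinity="single"):
    """Return the one host that should accept this connection."""
    # With "single" affinity only the client IP is hashed; with "none" the
    # client port is included too, so one client can spread across hosts.
    key = client_ip if affinity == "single" else f"{client_ip}:{client_port}"
    digest = hashlib.sha256(key.encode("ascii")).digest()
    ordered = sorted(hosts)  # every host must use the same ordering
    return ordered[int.from_bytes(digest[:4], "big") % len(ordered)]


def accepts(me, client_ip, client_port, hosts, affinity="single"):
    """Decision made locally by host `me`: pass the packet up, or drop it."""
    return owner(client_ip, client_port, hosts, affinity) == me


hosts = ["node1", "node2", "node3"]

# Every host sees the packet, but exactly one of them accepts it.
takers = [h for h in hosts if accepts(h, "203.0.113.7", 40001, hosts)]
print(takers)

# Behind a reverse proxy every connection carries the proxy's IP address,
# so "single" affinity sends all of them to the same node.
proxy_targets = {owner("10.0.0.5", port, hosts) for port in range(40000, 40100)}
print(len(proxy_targets))   # 1
```

The last two lines show the reverse-proxy problem directly: one hundred connections from the proxy's address all map to one node, so the rest of the cluster sits idle.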

As machines join or are removed from the cluster, the algorithm must be re-executed so that the load is distributed correctly among all active nodes. This re-evaluation process is called convergence. Note also that NLB is never aware of the actual load on any cluster node: it cannot determine whether a node’s CPU usage is extremely high or whether a node has too little available memory to process a request.
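A minimal sketch of heartbeat-based membership tracking shows when convergence would be triggered. The `Membership` class is hypothetical, and the one-second heartbeat period and five-message tolerance are assumed values rather than settings taken from this article. Only liveness enters the decision; no CPU or memory figure appears anywhere, which matches the limitation described above.

```python
class Membership:
    """Track cluster members from heartbeats; report when membership changes."""

    def __init__(self, hosts, period=1.0, tolerance=5):
        self.period = period          # seconds between heartbeats (assumed)
        self.tolerance = tolerance    # missed heartbeats allowed (assumed)
        self.last_seen = {h: 0.0 for h in hosts}
        self.members = set(hosts)

    def heartbeat(self, host, now):
        """Record a heartbeat from `host` at time `now` (seconds)."""
        self.last_seen[host] = now

    def check(self, now):
        """Return True if membership changed, meaning convergence is needed."""
        deadline = self.period * self.tolerance
        alive = {h for h, t in self.last_seen.items() if now - t <= deadline}
        changed = alive != self.members
        self.members = alive
        return changed


cluster = Membership(["a", "b", "c"])
for t in range(1, 7):
    cluster.heartbeat("a", float(t))
    cluster.heartbeat("b", float(t))
cluster.heartbeat("c", 1.0)           # host c goes silent after t = 1

print(cluster.check(3.0))             # False: c is still within tolerance
print(cluster.check(6.5))             # True: c is dropped, convergence needed
print(sorted(cluster.members))        # ['a', 'b']
```

A host that resumes sending heartbeats is picked up by the next `check` call in the same way, which again reports a membership change.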

Advantages of NLB

- Network Load Balancing is very efficient, and each machine added to the cluster can provide a significant performance improvement.

- NLB is fault tolerant. Many other load balancing implementations, such as round-robin DNS (RRDNS), continue to send requests to servers that have died until system administrators notice the problem and manually change the configuration. The key is redundancy in addition to load balancing: if any machine in the cluster goes down, NLB rebalances incoming requests across the servers that are still running. This covers scenarios such as a burnt-out power supply, a failed network card, or a crashed primary hard disk.

- Beyond this level of redundancy, increasing the load balancing capacity becomes simply a matter of adding machines to the cluster, which results in practically unlimited application scalability.

- NLB works with any TCP- or UDP-based application protocol. This means that an organization can configure a variety of NLB clusters, each with its own specific function. For example, one cluster may be dedicated to handling all Internet-originated HTTP traffic while another serves all intranet requests. If employees need to transfer files, an FTP cluster can act as centralized file storage with closely monitored uploads and downloads.

- By far one of the biggest advantages of NLB is its ease of use. NLB installs only a networking driver component; no special hardware is required. This simplifies the deployment of a load balancing solution and also significantly reduces costs.

