What is Fault Tolerance?

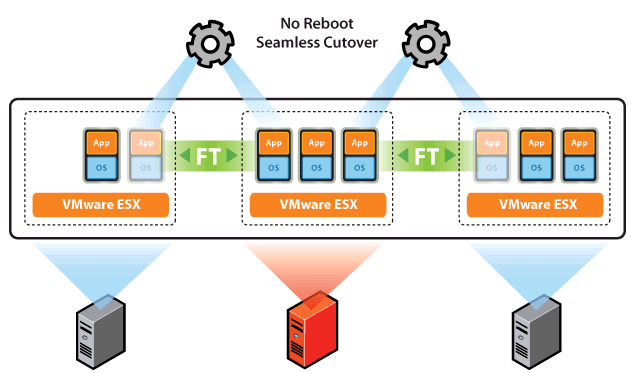

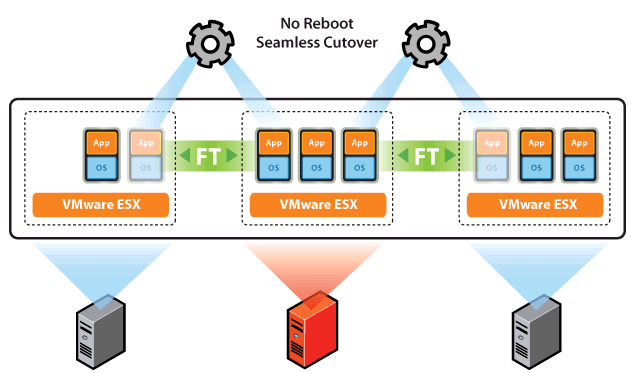

FT is the evolution of continuous availability that utilises VMware vLockstep technology to keep a primary and secondary virtual machine in sync. It is based on the record/playback technology used in VMware Workstation. It streams non-deterministic events and then replay will occur deterministically. This means it matches instruction for instruction and memory for memory to create identical processing

Deterministic means that the processor will execute the same instruction set on the secondary VM

Non-Deterministic means event functions such as network/disk/mouse and keyboard including hardware interrupts which are also played back

The Primary and Secondary VMs continuously exchange heartbeats. This exchange allows the virtual machine pair to monitor the status of one another to ensure that Fault Tolerance is continually maintained. A transparent failover occurs if the host running the Primary VM fails, in which case the Secondary VM is immediately activated to replace the Primary VM. A new Secondary VM is started and Fault Tolerance redundancy is reestablished within a few seconds. If the host running the Secondary VM fails, it is also immediately replaced. In either case, users experience no interruption in service and no loss of data

Fault Tolerance avoids “split-brain” situations, which can lead to two active copies of a virtual machine after recovery from a failure. Atomic file locking on shared storage is used to coordinate failover so that only one side continues running as the Primary VM and a new Secondary VM is respawned automatically.

Use Cases

- Applications that need to be available at all times, especially those that have long-lasting client connections that users want to maintain during hardware failure.

- Custom applications that have no other way of doing clustering.

- Cases where high availability might be provided through custom clustering solutions, which are too complicated to configure and maintain.

- On demand protection for VMs running end of month reports or financials

Best Practices for Fault Tolerance

To ensure optimal Fault Tolerance results, VMware recommends that you follow certain best practices. In addition to the following information, see the white paper VMware Fault Tolerance Recommendations and Considerations at http://www.vmware.com/resources/techresources/10040

Requirements for FT

- Cluster Requirements

- Host Requirements

- VM Requirements

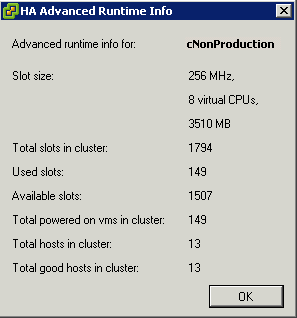

Cluster Requirements

- Host certificate checking must be enabled. Default for vSphere 4.1 but you may need to enable this (vCenter Server Settings > SSL Settings > Select the vCenter requires verified host SSL certificates)

- The cluster must have at least 2 ESXi hosts running the same FT Version or build number

- HA must be enabled on the cluster

- EVC must be enabled if you want to use FT in conjunction with DRS or DRS will be disabled

Hosts Requirements

- The ESXi hosts must have access to the same datastores and networks

- The ESXi hosts must have a FT Logging network setup

- The FT Logging network must have at least 1GB connectivity

- NICs can be shared if necessary

- The ESXi hosts CPUs must be FT compatible

- Host must be licensed for FT

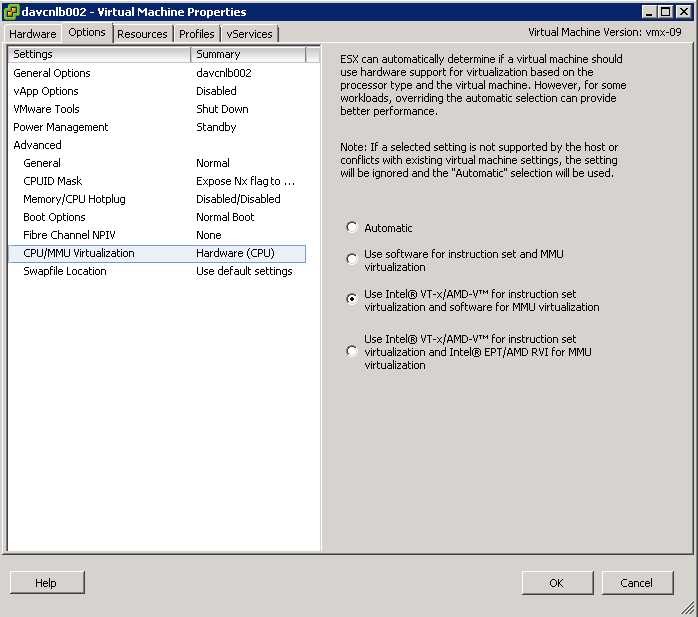

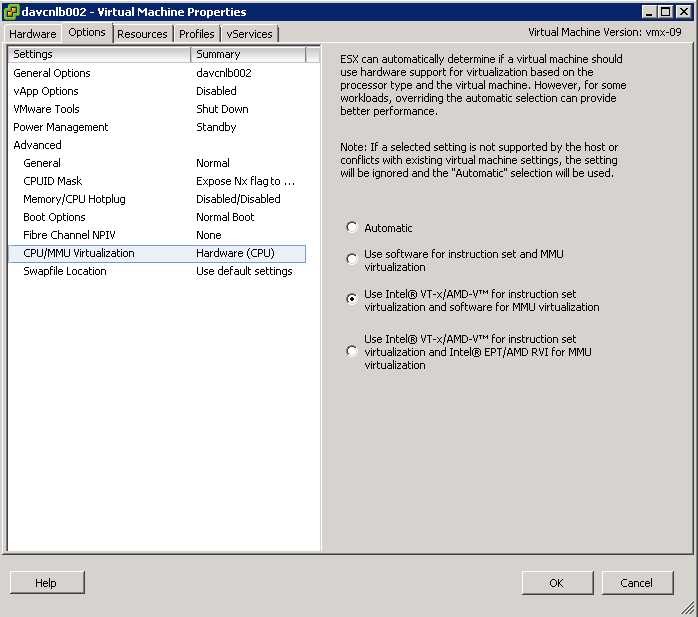

- Hardware Virtualisation must be enabled on the BIOS of the hosts to enable CPU support for FT

- It is recommended that Power Management is turned off in the BIOS. This helps ensure uniformity in the CPU speeds

VMs Requirements

- Only VMs with a single CPU are supported

- VMs must be running a supported O/S

- VMs must be stored on shared storage available to all hosts

- FC, iSCSI, FCOE and NFS are supported

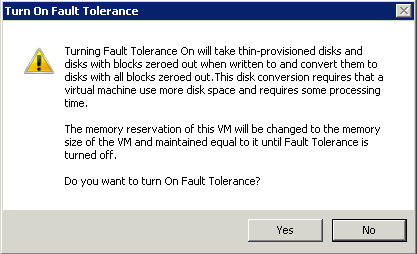

- A VMs disk must be eager zeroedthick format or a Virtual RDM (Physical RDMs are not supported)

- No VM snapshots

- The VM must not be a linked clone

- No USB, Sound devices, serial ports or parallel ports configured

- The VM cannot use NPIV

- Nested Page Tables/Extended Page Tables are not supported

- The VM cannot use NIC Passthrough

- The VM cannot use the older vlance drivers

- No CD-ROM or floppy devices attached

- The VM cannot use a paravirtualised kernel

- VMs must be on the correct Monitor Mode

Caveats

- You can use vMotion but not Storage vMotion and therefore Storage sDRS

- Hot Plugging is not allowed

- You cannot change the network settings while the VM is on

- Because snapshots are not supported, you will not be able to use any backup mechanism that uses snapshots. You can disable FT first before backing up

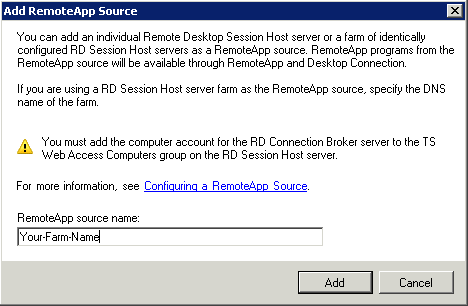

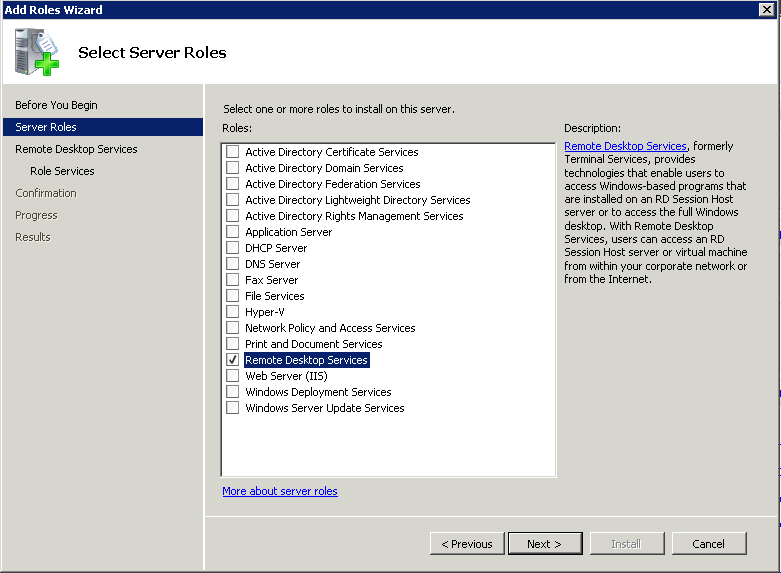

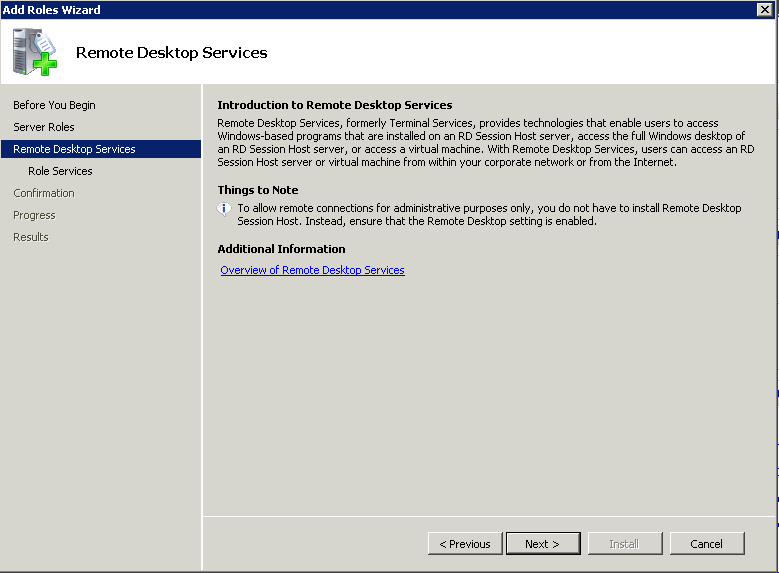

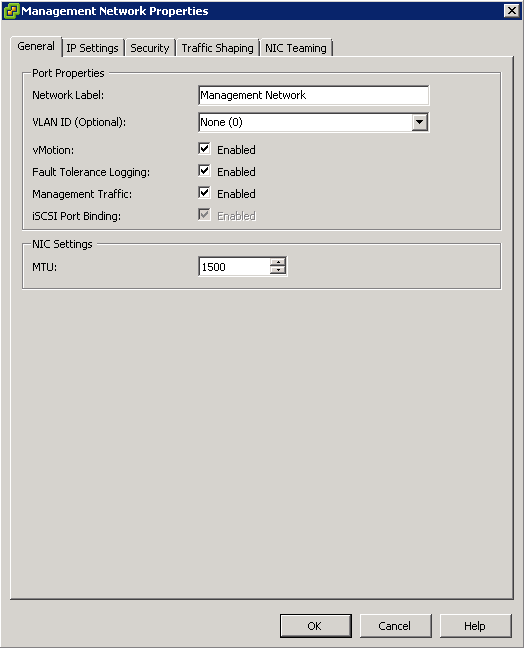

Configure FT Networking for Host Machines

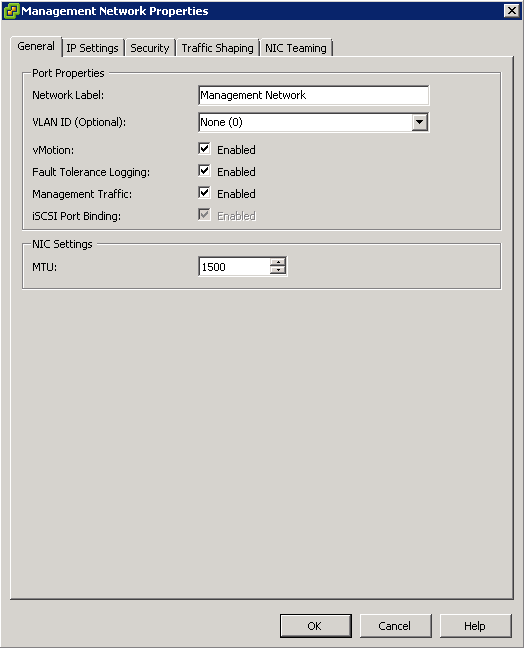

On each host that you want to add to a vSphere HA cluster, you must configure two different networking switches so that the host can also support vSphere Fault Tolerance.

To enable Fault Tolerance for a host, you must complete this procedure twice, once for each port group option to ensure that sufficient bandwidth is available for Fault Tolerance logging. Select one option, finish this procedure, and repeat the procedure a second time, selecting the other port group option.

Prerequisites

- Multiple gigabit Network Interface Cards (NICs) are required. For each host supporting Fault Tolerance, you need a minimum of two physical gigabit NICs. For example, you need one dedicated to Fault Tolerance logging and one dedicated to vMotion.

- VMware recommends three or more NICs to ensure availability.

- The vMotion and FT logging NICs must be on different subnets

- IPv6 is not supported on the FT logging NIC.

Procedure

- Connect vSphere Client to vCenter Server.

- In the vCenter Server inventory, select the host and click the Configuration tab.

- Select Networking under Hardware, and click the Add Networking link

- The Add Network wizard appears.

- Select VMkernel under Connection Types and click Next.

- Select Create a virtual switch and click Next.

- Provide a label for the switch.

- Select either Use this port group for vMotion or Use this port group for Fault Tolerance logging and click Next.

- Provide an IP address and subnet mask and click Next.

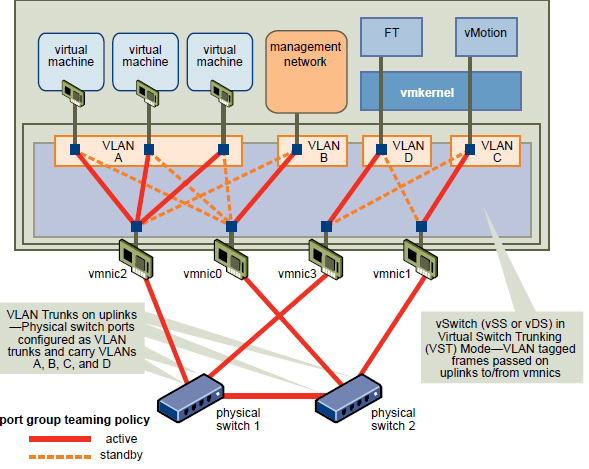

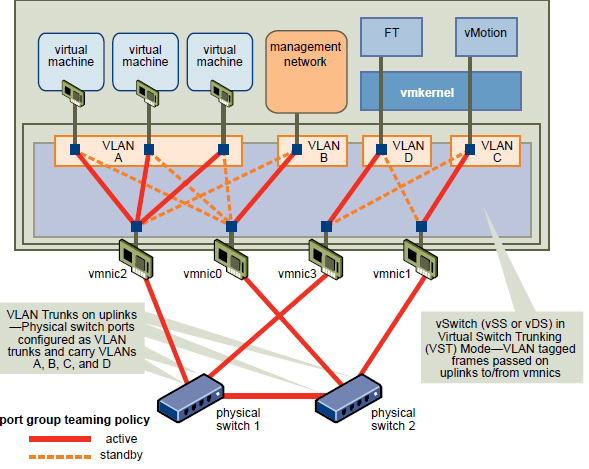

Networking Example

vMotion and FT Logging can share the same VLAN (configure the same VLAN number in both port groups), but require their own unique IP addresses residing in different IP subnets. However, separate VLANs might be preferred if Quality of Service (QoS) restrictions are in effect on the physical network with VLAN based QoS. QoS is of particular use where competing traffic comes into play, for example, where multiple physical switch hops are used or when a failover occurs and multiple traffic types compete for network resources.

This example uses four port groups configured as follows:

- VLAN A: Virtual Machine Network Port Group-active on vmnic2 (to physical switch #1); standby on vmnic0 (to physical switch #2.)

- VLAN B: Management Network Port Group-active on vmnic0 (to physical switch #2); standby on vmnic2 (to physical switch #1.)

- VLAN C: vMotion Port Group-active on vmnic1 (to physical switch #2); standby on vmnic3 (to physical switch #1.)

- VLAN D: FT Logging Port Group-active on vmnic3 (to physical switch #1); standby on vmnic1 (to physical switch #2.)

Instructions for setup

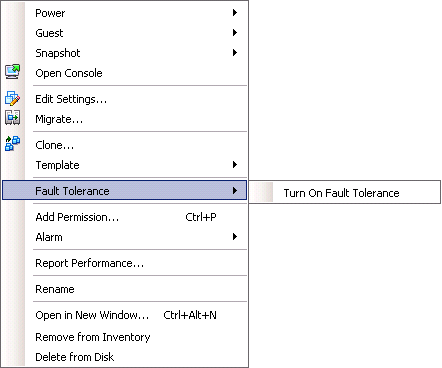

- Connect to vCenter using the vClient or Web Client

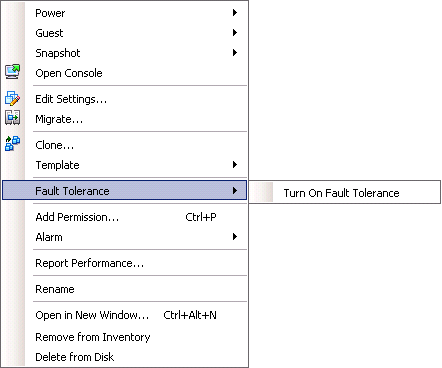

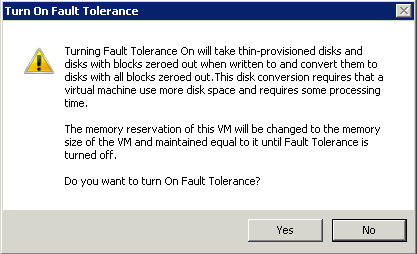

- Right click the VM you want to use for FT and select Fault Tolerance > Turn on Fault Tolerance

- You will get a message as per below

vSphere Fault Tolerance Configuration Recommendations

VMware recommends that you observe certain guidelines when configuring Fault Tolerance.

- In addition to non-fault tolerant virtual machines, you should have no more than four fault tolerant virtual machines (primaries or secondaries) on any single host. The number of fault tolerant virtual machines that you can safely run on each host is based on the sizes and workloads of the ESXi host and virtual machines, all of which can vary.

- If you are using NFS to access shared storage, use dedicated NAS hardware with at least a 1Gbit NIC to obtain the network performance required for Fault Tolerance to work properly.

- Ensure that a resource pool containing fault tolerant virtual machines has excess memory above the memory size of the virtual machines. The memory reservation of a fault tolerant virtual machine is set to the virtual machine’s memory size when Fault Tolerance is turned on. Without this excess in the resource pool, there might not be any memory available to use as overhead memory.

- Use a maximum of 16 virtual disks per fault tolerant virtual machine.

- To ensure redundancy and maximum Fault Tolerance protection, you should have a minimum of three hosts in the cluster. In a failover situation, this provides a host that can accommodate the new Secondary VM that is created.